The internet is at an inflection point. The platforms have cemented their power, generative AI and associated financial pressures are pushing companies to further degrade the online experience, and more than anything else, the notion that democratic governments should leave the internet alone is rapidly breaking down. Nothing shows that more than the recent arrest of Telegram CEO Pavel Durov in France and the suspension of Twitter/X in Brazil.

Make no mistake, governments’ stance on the internet has been changing for some time. Beyond the actions of French authorities and the Brazilian Supreme Court, Australia continues to try to craft a new framework for the internet that works for its society, Canada has advanced regulations of its own, with an Online Harms Act making its way through parliament, and the European Union arguably kicked off this whole movement in the first place. But as the United States hypocritically starts throwing up barriers of its own to try to protect Silicon Valley from Chinese competition, other countries see an opening to ensure what happens online better aligns with their domestic values, instead of those imposed by the United States.

This movement is more widespread than it might otherwise appear. Last month, the Global Digital Justice Forum, a coalition of civil society groups, published a letter about the ongoing negotiations over the United Nations’ Global Digital Compact. “It is eminently clear that the cyberlibertarian vision of yesteryears is at the root of the myriad problems confronting global digital governance today,” the group wrote. “Governments are needed in the digital space not only to tackle harm or abuse. They have a positive role to play in fulfilling a gamut of human rights for inclusive, equitable, and flourishing digital societies.” In most of the world, that isn’t a controversial statement, but it’s one that challenges the foundational ideas that emerged from the United States and have shaped the dominant approach to internet politics for several decades.

How internet politics were poisoned

In the 1990s, as the internet was being commercialized, cyberlibertarians grabbed the microphone and framed how many advocates would understand the online space for years to come. Despite the internet having been developed with military and government funds, cyberlibertarians treated the government as the enemy. “You are not welcome among us,” wrote Electronic Frontier Foundation (EFF) co-founder John Perry Barlow in his Declaration of the Independence of Cyberspace. “You have no sovereignty where we gather.” It was surely a welcome message to the global elite gathered at the World Economic Forum in 1996, where he published his manifesto. Governments, not corporations, were the great threat. That blind spot helped fuel the creation of the digital dystopia we now live in.

The cyberlibertarian approach that emerged out of the United States isn’t particularly surprising. The political dynamic in the United States has a stronger libertarian bent than in many other countries, especially the high-income Western countries it’s usually compared to. Digital politics in California had already integrated libertarianism and neoliberalism, so it wasn’t a big jump for it to define the approach to the internet. “The California Ideology is a mix of cybernetics, free market economics, and counter-culture libertarianism,” wrote Richard Barbrook and Andy Cameron in 1995. They described it as a “profoundly anti-statist dogma” that resulted from “the failure of renewal in the USA during the late ‘60s and early ‘70s.”

That perspective began to be championed by publications like Wired and digital rights groups like the EFF, but the corporate sector along with Democratic and Republican politicians found a lot to like too. In the late 1980s, then-Senator Al Gore was laying out how he saw “high-performance computing” as a tool of American power on the world stage, while Newt Gingrich embraced the internet when he became Speaker of the House in 1995. Despite being positioned as an approach that prioritized internet users, cyberlibertarianism was very friendly to the corporate interests that wanted to control the internet and shape it to maximize their profits.

The digital rights movement’s focus on privacy and speech occasionally found it running up against the nascent internet companies, but more often they found themselves on the same side of the fight — whether it was against government regulation or traditional competitors that new internet companies wanted to usurp (and ultimately replace). Cyberlibertarians and the digital rights movement that grew out of it championed the notion that tech companies were exceptional; that traditional means of assessing communications and media technologies were no longer valid and that traditional rules couldn’t apply to these new companies. It was a gift to the rising internet companies; one that has created a lot of problems as we try to properly regulate them and rein them in today.

Traditionally, media and communications sectors were subject to strict rules, including expectations of a certain amount of domestic ownership like the United States has with its broadcasters or regulation of the type of programming or advertising that could be shown. In many countries, there was also public ownership as a bulwark against the private sector, such as with public broadcasters. The neoliberal turn had already started to change some of that, but with the internet it was all out the window; it had to be left to the private sector, with minimal regulation. If American tech companies that got a head start and easier access to capital dominated other countries’ internet sectors, their governments simply had to accept it, or else they would feel the combined pressure of company lobbyists, US diplomats, and digital rights groups that claimed such regulations were an inherent violation of digital rights.

Probably one of the best examples of this dynamic is the copyright fight. For years, record labels, entertainment companies, and book publishers had pushed to increase copyright terms and worsen the terms of the deals given to artists. When file sharing came along, those companies worked with government to attempt a massive crackdown, but it wasn’t hard to turn public sentiment against them as it became incredibly easy to get access to far more music and media than people could ever imagine. Anti-copyright campaigners sided with the tech companies that wanted to violate the copyrights held by those traditional firms instead of trying to find a middle ground, even defending Google when it began scanning millions of books as part of its Google Books project. At the time, it was very much a David vs Goliath situation, with the tech companies in the position of David. But that fight — and many others like it — helped enable the growth of tech companies into the monopolists they are today.

“Don’t be evil” has long been jettisoned at Google and beyond in favor of a “move fast and break things” approach. They want to increase their power and grow their wealth at any cost, and are driven to do so just like any other capitalist company. They are not unique in that, but for a long time their public relations teams successfully convinced people otherwise. Parts of the digital rights movement have evolved in recognition of that, paying closer attention to the economic and political power the companies wield, yet even then it too often becomes narrowly focused on competition policy. The organizations most responsible for this approach have never made amends for their role in helping empower tech companies to cause the many harms they do today. In some cases, they still go to bat for them.

Prominent digital rights groups defended the scam-laden crypto industry several years ago, even taking money from crypto and Web3 groups to fund their efforts, and now claim that when OpenAI, Google, or Meta steal any content they can get their hands on — from artists, writers, news organizations, or social media users (which is basically all of us) — those actions should be considered fair use. In short, some of the most powerful companies in the world should have no obligation to compensate or get permission from the people who made the posts or created the works because that would threaten the cyberlibertarian ideals they’ve built their worldview on.

Cyberlibertarianism helps Silicon Valley

As we move into a period where regulation of digital technology and actions against major tech companies are becoming the norm, disingenuous opposition from industry lobbyists and some digital rights activists alike has become all too common. With crypto, they often argued they weren’t supporting the scams, but the idea of decentralization, even though, in practice, they were indeed defending a technology that was being commercialized as scam tech. A similar tactic is playing out with the defense of generative AI companies, where advocates arguing that stealing everyone’s work should be considered fair use say they’re not defending generative AI itself, but if they don’t defend the mass theft companies are engaging in then the entire practice of scraping would be in jeopardy.

Those arguments are intentionally overbroad and inherently deceptive. They make it seem like the entire foundation of the internet itself is at risk, playing on the libertarian reflexes within the tech community and taking advantage of the broader public’s lack of technical knowledge about how the internet works. But they’re exaggerations that serve the tech companies at the end of the day and have become commonplace in the opposition to efforts to rein in Silicon Valley’s power.

There are many examples of it. When Australia and Canada moved forward with legislation to force Google and Meta to bargain with news publishers so some of their enormous digital ad profits would go to local journalism, the cyberlibertarian response was to claim that the countries were implementing a “link tax” that would threaten one of the foundational aspects of the web: the hyperlinking between different web pages. Yet, while politicians and legislation often referred to the fact that the platforms do link to news articles, they never sought to actually put a price on links. In practice, the focus on links was rhetorical — a way to explain their plans to the public — with the ultimate goal being to force tech companies to sit down with news publishers and make a deal.

A similar process played out when Canada followed several European countries in regulating streaming platforms, something Australia is planning to do and the UK is investigating as well. The law forces foreign platforms like Netflix or Prime Video to commit to funding local content production and displaying a certain amount of Canadian content to users, as Canadian broadcasters have long been expected to do. Not only was this framed as a tax that would be passed on to consumers, but prominent digital rights campaigners picked up industry talking points that the legislation wouldn’t just apply to streaming companies, but to independent content creators on platforms like YouTube or TikTok, despite the government and the media regulator being clear that was not their plan. That fueled a deceptive news cycle and even got some online creators to publicly oppose the bill based on false information. As usual with cyberlibertarian approaches, the honest statements of government couldn’t be trusted.

The arrest of Durov and suspension of Twitter/X also bring the issues of privacy and speech into the spotlight. For years, these have been the central focus of digital rights campaigns, yet framing the internet through those lenses leads to a specific understanding of the problem, one that positions the government as the central threat. That approach is based on an inherently American perspective, coming out of how the US First Amendment frames free speech, rather than the understanding of free expression in many other countries that acknowledges the role of government in intervening against speech that threatens the broader society, which is exactly what the Brazilian Supreme Court is doing. There has been minimal outrage over the suspension of Twitter/X outside the right-wing echo chamber, which I would argue is a result of the hatred that’s developed for Elon Musk outside that circle. In any other circumstance, the banning of a social media platform would seem like exactly the kind of case digital rights groups would jump on.

Telegram is another case entirely. For months before Durov was arrested, French authorities who specialized in investigating child abuse were collecting evidence of child predators using the platform to communicate with children, convincing them to make explicit images of themselves, and bragging about their abuse of children with other predators. Police tried to get Telegram to act, but it ignored the requests — to such a degree that the company until recently bragged on its website of not responding to authorities. Unsurprisingly, the police sought an arrest warrant for the chief executive and when he landed in France, they arrested him.

While some commentators have tried to frame the arrest as a speech issue, many privacy advocates have tended to ignore the substance of the case to narrowly focus on the fact that two of Durov’s charges fall under an obscure 2004 French law that requires companies distributing encryption technology to declare it. It’s not a surprise why: debating the issue of whether child predators and other criminals should be able to freely use these services is an uncomfortable one for them, because they explicitly argue in favor of it. The cyberlibertarian argument is that all communications must be encrypted to protect them from the governments they perceive as such a significant threat, and that means allowing the dregs of society to use them in criminal ways too; something the vast majority of the public would surely disagree with.

It’s an argument that once again treats digital technology and the internet as an exception where traditional norms cannot apply, particularly the fact that authorities have long been able to get warrants to search people’s mail, wiretap their phones, or obtain their text messages. That’s the trade-off we’ve collectively made, and one that the vast majority of people have never seen as a threat to their rights, freedoms, or liberty, because they’re not libertarians. The push for encryption also sets up an arms race, forcing authorities to seek out even more intrusive methods to identify criminals and collect necessary evidence, including procuring software that compromises devices themselves similar to NSO Group’s Pegasus spyware. But once those tools exist, they can be obtained by many other groups that don’t have to follow the rules in democratic countries and use them against a much wider swath of people.

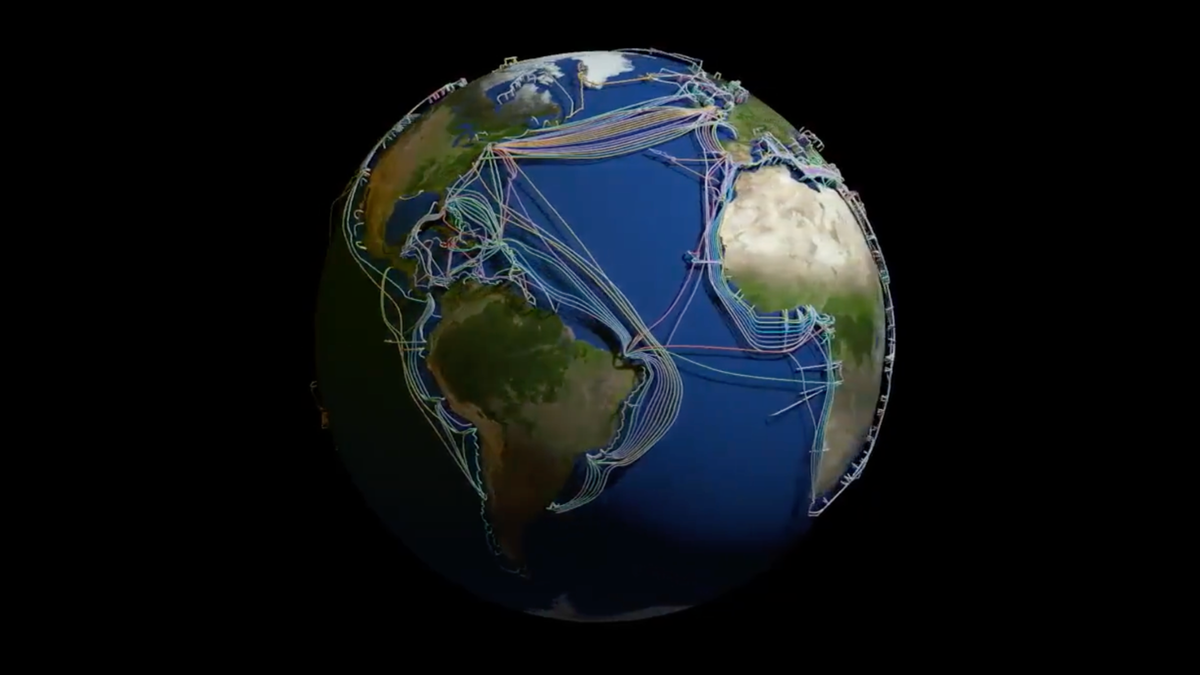

It’s also quite an ironic stance. The vast surveillance apparatus these campaigners decry is often not one owned and controlled by government. In fact, it was developed and rolled out by the private companies cyberlibertarians championed up until very recently, and sometimes still find themselves defending. The internet has enabled the creation of the most intrusive and comprehensive global surveillance system in the history of humanity, as companies developed business models based on mass data collection to shape advertising and other means of targeting users. It’s an infrastructure that has increasingly moved into physical space as well, and one that everyone from hackers to intelligence agencies has been able to use for all manner of nefarious ends.

This internet, where corporate power was a lesser concern than government, was supposed to deliver “a civilization of the Mind in Cyberspace” that would turn out to be “more humane and fair than the world your governments have made before,” as Barlow put it in 1996. But that vision was compromised by its blind spots and exclusions, hindrances that are still central to how many people see the internet. Writing about Barlow in 2018, journalist April Glaser wondered what might have been if another approach had inspired the past two decades of internet politics. “I can’t help but ask what might have happened had the pioneers of the open web given us a different vision,” she wrote, “one that paired the insistence that we must defend cyberspace with a concern for justice, human rights, and open creativity, and not primarily personal liberty.” We’ll never know what could have been, but we can still jettison that perspective from our fights over the internet moving forward.

Embracing digital sovereignty

For a long time, it was hard to push back against an understanding of the internet framed through an individualist and anti-statist cyberlibertarian lens, even as a particular version of digital technology was being pushed on the world by a hegemonic United States to benefit its growing internet companies (and, by extension, its own global power). American politicians were not shy about that fact, but it largely escaped the digital rights movement, particularly its leading organizations in the United States, whose narrow obsession with privacy and an American interpretation of free speech also set the mold for how groups in other countries understood digital communication. But with US dominance no longer guaranteed and people around the world getting fed up with the abuses of major tech companies, there’s an opportunity to carve out a new approach to the internet.

Instead of solely fighting for digital rights, it’s time to expand that focus to digital sovereignty that considers not just privacy and speech, but the political economy of the internet and the rights of people in different countries to carve out their own visions for their digital futures that don’t align with a cyberlibertarian approach. When we look at the internet today, the primary threat we face comes from massive corporations and the billionaires that control them, and they can only be effectively challenged by wielding the power of government to push back on them. Ultimately, rights are about power, and ceding the power of the state to right-wing, anti-democratic forces is a recipe for disaster, not for the achievement of a libertarian digital utopia. We need to be on guard for when governments overstep, but the knee-jerk opposition to internet regulation and disingenuous criticism that comes from some digital rights groups do us no good.

The actions of France and Brazil do have implications for speech, particularly in the case of Twitter/X, but sometimes those restrictions are justified, whether it’s placing stricter rules on what content is allowable on social media platforms, limiting when platforms can knowingly ignore criminal activity, or even banning platforms outright for breaching a country’s local rules. We’re entering a period where internet restrictions can’t just be easily dismissed as abusive actions taken by authoritarian governments, but one where they’re implemented by democratic states with the support of voting publics that are fed up with the reality of what the internet has become. They have no time for cyberlibertarian fantasies.

Counter to the suggestions that come out of the United States, the Chinese model is not the only alternative to Silicon Valley’s continued dominance. There is an opportunity to chart a course that rejects both, along with the pressures for surveillance, profit, and control that drive their growth and expansion. Those geopolitical rivals are a threat to any alternative vision that rejects the existing neo-colonial model of digital technology in favor of one that gives countries authority over the digital domain and the ability for their citizens to consider what tech innovation for the public good could look like. Digital sovereignty will look quite different from the digital world we’ve come to expect, but if the internet has any hope for a future, it’s a path we must fight to be allowed to take.