Making big public statements is always fun and people who think themselves to be important love doing it as a way of trying to influence public opinion and/or politics. They are a way for institutions and individuals to organize and try to shine some light onto important issues.

We’ve seen many such things in the AI space, one of the more ludicrous examples being the big push to pause “AI” development (signed by a bunch of billionaires developing “AI”). With “AI” having sucked up all the air in the room what else could we declare things about?

Usually those declarations have a very short shelf-life, don’t really do much and are mostly harmless.

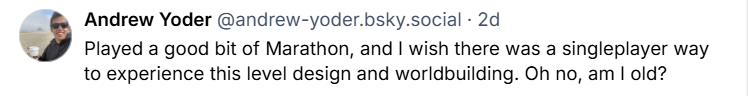

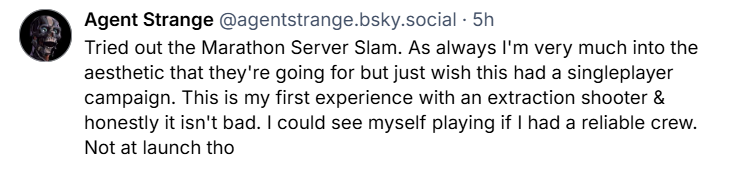

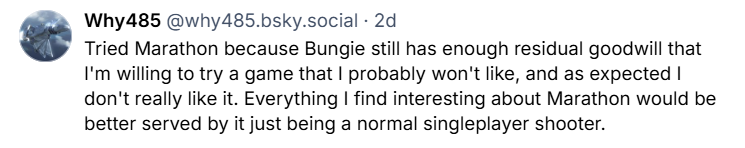

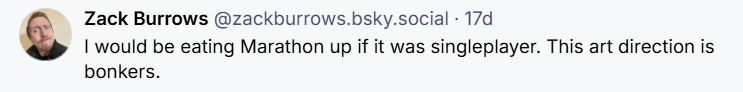

So there is a new declaration in town called “The Pro-Human AI Declaration”. And before we go into the details, let’s look at who is pushing this for a second because the declaration keeps talking about how broad their coalition is.

And boy is that tent big. It’s big enough to proudly list Steve Bannon, one of the architects of the new wave of fascism, as its second individual supporter. So it’s a declaration white nationalists and fascists can get on board with. Glenn Beck, another right-wing talking head, was also happy to put his name there.

But it’s not just right-wing grifters and fascists: Yoshua Bengio, Stuart Russell and many other academics from the field of computer science, they also got that part covered. Richard Branson of the Virgin Group is probably one of the bigger corporate names, there’s SAG-AFTRA leadership and a whole lot of religious organizations all sharing the stage with … well fascists.

There also is a lot of organizational support: Again SAG-AFTRA is joined by a whole bunch of ethical “AI” groups, religious groups and of course the Center for Humane Technology, known to jump on every bandwagon that gets Tristan Harris some airtime. It’s also a vehicle for the Future of Life Institute, a longtermist secular church based on eugenic thinking. The Future of Life Institute shouldn’t be touched with a ten-foot pole by anyone interested in actual human flourishing: FLI doesn’t care about you or anyone, they want to make sure that future digital beings somehow get spawned and their bit should be happy. They would be ridiculous if they didn’t weasel themselves into the discussion about “AI” and other technologies. Like they got themselves onto this declaration together with a bunch of fascists.

But maybe their case is just too good. Too important. I wrote about being able to form alliances lately, after all, didn’t I? (Just a quick note on that: There can never be an alliance with fascists. It doesn’t matter what they ask for. What they fight for. All you do with fascists is anything to stop them by all means necessary.)

So what are the important statements here? They are not too many so let’s have a quick look, shall we?

1. Keeping Humans in Charge

Human Control Is Non-Negotiable: Humanity must remain in control. Humans should choose how and whether to delegate decisions to AI systems.

Meaningful Human Control: Humans should have authority and capacity to understand, guide, proscribe, and override AI systems.

No Superintelligence Race: Development of superintelligence should be prohibited until there is broad scientific consensus that it can be done safely and controllably, and there is strong public buy-in.

Off-Switch: Powerful AI systems must have mechanisms that allow human operators to promptly shut them down.

No Reckless Architectures: AI systems must not be designed so that they can self-replicate, autonomously self-improve, resist shutdown, or control weapons of mass destruction.

Independent Oversight: Highly autonomous AI systems where controllability is not obvious require pre-development review and independent oversight: genuine authority to understand, prohibit, and override, not industry self-regulation.

Capability Honesty: AI companies must provide clear, accurate and honest representations of their systems’ capabilities and limitations.

Ahh the good old “human in the loop” shebang. Or as we who have lived in this world call it: The “take the fall for the decisions an automated machine made” trick.

There’s not much to this: “Humans” must be in control. What this omits is the question of which humans. Say today a few people (basically all of them very rich men) who are considered human are in control. Is that what they mean? Because sure as shit I am not. Maybe you are, I don’t know you. The claim that “humans” need control pretends that we are all one homogeneous mass but there are differences in power and access. Every monstrosity humanity did was under human control. Human control feels great because everyone reading it thinks it means them. It does not.

Then there’s the Sci-Fi angle. Nobody is allowed to build a machine god and it needs an off-switch so we can kill it before [INSERT RANDOM SCI FI MOVIE REFERENCE]. Sure whatever. We could write the same passage about rabid unicorns because we have basically the same ability to bring those to life as we have with “Superintelligence”. The “self-replicating” thing goes in the same direction. This is just science fiction references to make everyone feel afraid. But that’s not real. It’s not addressing any actual harms that “AI” systems do – which there are many of – and shifts the whole discourse to talking about nothing really. Sure I also watched The Matrix, it was a cool movie. But we shouldn’t base our politics on it (except for understanding that trans rights are human rights of course!).

I am a bit more charitable with the last two aspects: Sure oversight is good. All companies need way more oversight. But not by setting up a comfy oversight board for a few handpicked researchers, investors and activists who get flown to nice meetings debating whatever. Actual oversight needs teeth, needs the willingness to use them. And demanding that companies must be honest would be neat. Good luck with an “AI” sector where basically every CEO is a compulsive liar. But sure.

2. Avoiding Concentration of Power

No AI Monopolies: AI monopolies that concentrate power, stifle innovation, and imperil entrepreneurship must be avoided.

Shared Prosperity: The benefits and economic prosperity created by AI should be shared broadly.

No Corporate Welfare: AI corporations should not be exempted from regulatory oversight or receive government bailouts.

Genuine Value Creation: AI development should prioritize solving real problems and creating authentic value.

Democratic Authority Over Major Transitions: Decisions about AI’s role in transforming work, society, and civic life require democratic support, not unilateral corporate or government decree.

Avoid Societal Lock-In: AI development must not severely limit humanity’s future options or irreversibly limit our agency over our future.

This one is fun. Because if we took it seriously this would be the demand for democratic socialism or even democratic communism. Which I am totally on board with. But that’s probably not what these folks are aiming for.

TBH the fact that all these paragraphs add “AI” is cute because if you strip it out of the text this is a list of demands to rein in the tech sector and corporations in general. But that’s not on the agenda.

“No AI monopolies”. So other monopolies are okay? “Genuine Value Creation” and “solving real problems”. Yeah sure. But that also applies to fucking Microsoft or whoever invented vapes.

This whole part is pointing out things that are wrong with capitalism. Welcome comrades (no, not you Steve Bannon and your fascist buddies).

But it’s very explicitly not saying that. Because this is “AI”. “AI” is special. All the things labeled as problematic here happen every day. It has absolutely nothing to do with “AI”. This is just to get some buy-in by connecting the actual experience of people having to live in late-stage capitalism somehow to “AI”. But the thing breaking us is capitalism.

Another question: Who gets to decide what “genuine value” is? The dude who invented vapes can point at how he’s making all that money and how it creates economic activity. Is the advertising industry “creating authentic value”? What does that even mean? Are they arguing for full democratic control of the economy? Again: Big fan, let’s go, but in their reading is that not a contradiction to not “stifle innovation, and imperil entrepreneurship”?

You gotta pick one: Democratic control or unchained innovation and markets. Those things have more tension than me reading that smart and famous people sign a declaration with Steve fucking Bannon.

3. Protecting the Human Experience

Defense of Family and Community Bonds: AI should not supplant the foundational relationships that give life meaning—family, friendship, faith communities, and local connections.

Child Protection: Companies must not be allowed to exploit children or undermine their wellbeing with AI interactions creating emotional attachment or leverage.

Right to Grow: AI companies should not be allowed to stunt children’s physical, mental or social growth or deprive them of essential experiences for healthy development during critical periods.

Pre-Deployment Safety Testing: Like drugs, chatbots must undergo pre-deployment testing for increased suicidal ideation, exacerbation of mental health disorders, escalation of acute crisis situations, and other known harms.

Bot-or-Not Labeling: AI-generated content that could reasonably be mistaken for human-generated must be clearly labeled as such.

No Deceptive Identity: AI should clearly and correctly identify itself as artificial, nonhuman, and not a professional, and it should not claim experiences it lacks.

No Behavioral Addiction: AIs should not cause addiction or compulsive use through manipulation, sycophantic validation, or attachment formation.

I could almost repeat what I wrote about the last segment: Yeah sure, but this has very little to do with “AI”. “AI” is built to create an addiction/dependency but most other digital systems at least try to do that, too. Sure, with “AI” it’s no longer Meta who is your dealer but OpenAI but in the end both companies see you just as a resource to exploit. Nothing “AI” here.

Yes, companies should not build systems exploiting children. Who’s with me burning Roblox to the ground, or every free-to-play game in existence?

But there is something where I can calm the signatories (who all thought that putting their name next to Steve Bannon was a good idea): The idea that “AI” systems need to be labeled is already the law in the EU. It’s interesting though that their demands are way less strict than existing laws: A bot only needs to label its output if it could be mistaken for human. So a bot making hiring decisions in some backend doesn’t need to be disclosed? That’s super weak.

And just a small nitpick here: “it should not claim experiences it lacks”: No “AI” has any experience. They are buckets of floating point numbers with delusions of grandeur. Living beings existing in the world have experiences. Numbers do not.

4. Human Agency and Liberty

No AI Personhood: AI systems must not be granted legal personhood, and AI systems should not be designed such that they deserve personhood.

Trustworthiness: AI must be transparent, accountable, reliable, and free from perverse private or authoritarian interests.

Liberty: AI must not curtail individual liberty, freedom of speech, religious practice, or association.

Data Rights and Privacy: People should have power over their personal data, with rights to access, correct, and delete it from active systems, AI training sets, and derived inferences.

Psychological Privacy: AI should not be allowed to exploit data about the mental or emotional states of users.

Avoiding Enfeeblement: AI systems should be designed to empower, rather than enfeeble their users.

I also think that “AI” systems deserve no personhood. And since I believe that “the corporation” is for all intents and purposes one of the first forms of “AI” we should strip them of a lot of person rights that we have given them. Who’s with me? You cannot say that “AI” shouldn’t get personhood and then claim that corporations can. Personhood is for people. But that’s me being radical again.

Now I don’t know where “perverse” found its way into this document (probably one of the many, many, many religious groups involved here) but it surely reads a bit as if they are afraid that their “AI” might be too queer. But maybe I am too uncharitable here.

“AI” is supposed to be accountable. But who is accountable? A person is accountable for their actions. But we already said that “AI” is not a person. What does it mean for an “AI” to be “accountable” then?

We go on with “free speech”. Of course. But which understanding of that? In Germany denying the existence of the Holocaust is illegal. Should your “AI” deny the existence of the Holocaust? For freeze peach?

But it’s cute that under “data rights and privacy” they added a claim for GDPR.

“Psychological privacy”: Here’s the kicker. “AI” doesn’t exploit. The people who build and run it do. But you do not talk about them.

“Avoiding enfeeblement”: Yeah big fan of that. But if you actually mean it and look at the studies about cognitive offloading and its effects, you can join me and my army of friends of Ned Ludd burning down a whole bunch of data centers. “AI”s are built to take your agency and capability.

5. Responsibility and Accountability for AI Companies

No Liability Shield: AI must not be able to act as a liability shield, preventing those deploying it from being legally responsible for their actions.

Developer Liability: Developers and deployers bear legal liability for defects, misrepresentation of capabilities, and inadequate safety controls, with statutes of limitation that account for harms emerging over time.

Personal Liability: There should be criminal penalties for executives responsible for prohibited child-targeted systems or ones causing catastrophic harm.

Independent Safety Standards: AI development shall be governed by independent safety standards and rigorous oversight.

No Regulatory Capture: AI companies must not be allowed undue influence over rules that govern them.

Failure Transparency: If an AI system causes harm, it should be possible to ascertain why as well as who is responsible.

AI Loyalty: AI systems performing functions in professions with fiduciary duties, such as health, finance, law, or therapy, must fulfill all of those duties, including mandated reporting, duty of care, conflict of interest disclosure, and informed consent.

Now. Here’s a surprise: I agree with a lot of what is written here. I have actually written about “that asterisk” before. We accept a level of “AI” companies delivering absolute garbage and putting it on us to “check” it. We would not accept that dynamic anywhere else. If I go to the supermarket to buy milk and all cartons had a post-it stuck to them saying “might be full of rat poison, you better check”, that store would just be closed.

“AI” currently is in the status of a supermarket that doesn’t know (and doesn’t care enough to check) if it’s delivering rat poison or not. Awesome.

I agree that “AI” companies should be liable for their product. I also think that people deploying and integrating these products should be liable.

And I also agree that we mustn’t let “AI” companies write or influence legislation.

This is the least bad part of the document. (You know, the document that fascists also support and signed, with respectable people not having a problem with that.)

The document itself isn’t that interesting. A bunch of half-assed, somewhat contradictory demands that read a bit as if a chatbot helped write them. A whole bunch of problems being correctly identified and then quickly attributed to “AI” instead of capitalism. Sure, it’s hard to challenge one’s beliefs but still: Aggregating this should have led to some insight and thinking. Unless … this was written with a chatbot, right?

More interesting is definitely who signed it. Who thought that it was good to integrate fascists and white nationalists whose whole aim is to destroy the rules-based order that the world used to be in?

We see many organizations trying to show their relevance by being on this – sorta empty – paper. But they are also legitimizing a lot of problematic stuff here – The Fascists and The Future of Life Institute being just the first things that caught my eye and I am scared to look into the religious organizations and all the “ethical AI” orgs because usually when you start poking around something filthy comes up.

The whole document and activity is based on the assumption that “AI” is special. Needs special rules. Special approaches. But that ain’t true.

We need to regulate tech. Need to regulate how it is being used against us, our wellbeing, our rights in this world. But it doesn’t matter if the abuse committed by corporations and governments is done through a stochastic model, some other form of automation or just people: “AI”s mustn’t stifle our development and freedoms? Yeah, neither should people.

The grown up approach to regulating tech does not lie in building special rules for special technologies. That’s a sucker’s game that tech loves. Because it can drown the discourse in jargon and get a large influence because “only tech bros understand that stuff”. Fuck that.

Regulate outcomes. The tech doesn’t matter. For the person hurt the tool that was used to hurt them isn’t that relevant. We need to make sure that we are reducing, ideally eliminating the hurt.

Coda: I can’t get over how the whole document basically argues for democratic socialism/communism and the abolition of capitalism. Looking at the organizations who pushed it and probably wrote most of it, it shows a lack of self-awareness and critical thinking that is kinda cute. Like a baby right before it learns object permanence.