Think About Supporting Garbage Day!

It’s $5 a month or $45 a year and you get Discord access, the coveted weekend issue, and monthly trend reports. What a bargain! Hit the button below to find out more.

The Manosphere’s Victory Lap

There’s been a lot of ink spilled over the last few months attempting to explain what exactly happened to America. Possibly even more than the last time this happened, 10 years ago. Because unlike 2016, it doesn’t feel like fascism is some kind of invasive force seeping in from the dark corners of the web; now, instead, it has become the air we breathe, like cultural microplastics. 4chan slang, red pill ideology, and Substacker race science have become so prevalent that even when you try and avoid them, your behavior is still defined in opposition to them.

And while there are a myriad of ways this amorphous world of hypermasculine fascism arose, there is one chief architect. A man who has spent years connecting the various nodes of comedy, sports, influencers, platforms, and politicians that power it. And that man is Dana White.

White, the president and CEO of Ultimate Fighting Championship (UFC) and, as of January, a Meta board member, created the new masculinity underpinning Trump’s second administration. And, over the weekend, all of the spheres of influence that it touches came together to welcome podcasters and alleged human traffickers Andrew and Tristan Tate to Las Vegas. The event, UFC 313, was a victory lap for the newly energized manosphere. A showy display of power meant to prove that awful men never have to face consequences ever again.

(Photo by Ian Maule/Getty Images)

White bought UFC in 2001 after being, he claims, chased out of Boston by mobster Whitey Bulger. (Yeah, you and everyone’s uncle, Dana. Every fucking meathead in Boston born before 1985 tells some version of this same story). That’s when he hired podcaster Joe Rogan to be a commentator for UFC matches. That’s also when White met President Donald Trump, who, at the time, was hosting UFC matches at his venues in Atlantic City and Las Vegas. White would go on to officially endorse Trump during the 2016 Republican National Convention, telling the crowd, “Donald was the first guy that recognized the potential that we saw in the UFC and encouraged us to build our business.” It’s been memory-holed, but Republicans weren’t actually very excited about professional mixed martial arts at the time. Sen. John McCain once referred to it as “human cockfighting” lol.

Both White and Rogan got more vocal in their support for Trump during his first administration. Rogan always treated Trump as more of a fun character, while White told The Hill in 2018 that he and Trump had become even closer since Trump entered the White House. But the real turning point was during the pandemic. Which is when White connected with a team of YouTubers that may have been more instrumental than even Rogan in helping Trump get elected a second time.

The Nelk Boys are a group of American and Canadian YouTubers that started out making prank videos. In 2015, a few of them were arrested after telling cops that they had coke in their car (it was Coca-Cola). Around 2020, White was introduced to the Nelk Boys channel through his son, Dana Jr. During the pandemic, the Nelk Boys were flown out to UFC’s Fight Island in Abu Dhabi. And right before that year’s election, the Nelk Boys were invited onto Air Force One to shoot a video with Trump. Trump also went on their podcast Full Send after the election, but the episode was removed by YouTube because he couldn’t stop ranting about election interference. In 2022, Elon Musk made an appearance on Full Send and in 2023, Trump did another interview with them.

Last October, the Nelk Boys were instrumental in building a youth coalition for Trump’s campaign. They launched a voter registration program, threw a music festival, and bought ads on other bro-y podcasts, like Kill Tony (which records in Rogan’s comedy club), This Past Weekend w/ Theo Von, and BS w/ Jake Paul. If you woke up on November 6th and wondered where the hell all these guys came from, the answer is they’re very popular with young men on YouTube and are getting very rich from supporting Trump and, most importantly, supporting UFC.

Interestingly enough, White admitted in November that he kind of regrets going all in on Trump last year, telling The New Yorker, “I want nothing to do with this shit. It’s gross. It’s disgusting.” He’s happy Trump won, but it seems like stumping was a bit more involved than he expected. But I also think it’s an important detail here. The end goal for White has always been about enriching himself and UFC. One of the more insightful things I’ve read about White’s motives was actually a Reddit comment from a few years ago in a thread asking what the deal was with UFC and the Nelk Boys. “I think it's a strategic relationship on Dana's part (and the Nelk boys too, probably),” the user wrote. “He's knows they're extremely popular with young men and Dana wants to make sure UFC doesn't go the way of boxing; being viewed primarily by middle-aged/older men.”

Which is how White ended up personally greeting the Tate brothers in Vegas last weekend. Oh, and apparently Mario Lopez is a fan of the Tates as well; he was filmed saying hi. Also in attendance were Trump-appointed FBI Director Kash Patel, who wants to hire the UFC to train agents, and Mel Gibson. They fist-bumped at one point during the match.

Like every other villain in Trump World, I think it’s important to not give White too much credit here. He’s not some kind of mastermind, but he has been instrumental in changing American masculinity, making it inseparable from conservatism and, of course, inseparable from the UFC brand. And now he and every other weird muscle man that comes to UFC fights are all aligned in their hatred of women and deep desire to feel masculine and powerful. A sea of, usually, very bald men in tight shirts that want to hurt the world and be celebrated for it. But if you drill further down into their ideology you’ll also find the same thing every time. Someone who is trying to get rich, has failed everything they’ve tried, and realized that manipulating sad internet men was the easiest way to do it.

The following is a paid ad. If you’re interested in advertising, email me at ryan@garbageday.email and let’s talk. Thanks!

Make your details harder to find online

The most likely source of your personal data being littered across the web? Data brokers. They're using and selling your information: home address, Social Security Number, phone number and more. Incogni helps scrub this personal info from the web and gives you peace of mind to keep data brokers at bay. Protect yourself: Before the spam madness gets even worse, try Incogni. It takes three minutes to set up. Get your data off 200+ data broker and people-search sites automatically with Incogni. Incogni offers a full 30-day money-back guarantee if you're not happy ... But you will be.

Unlock 55% off annual plans with code DAYDEAL at checkout.

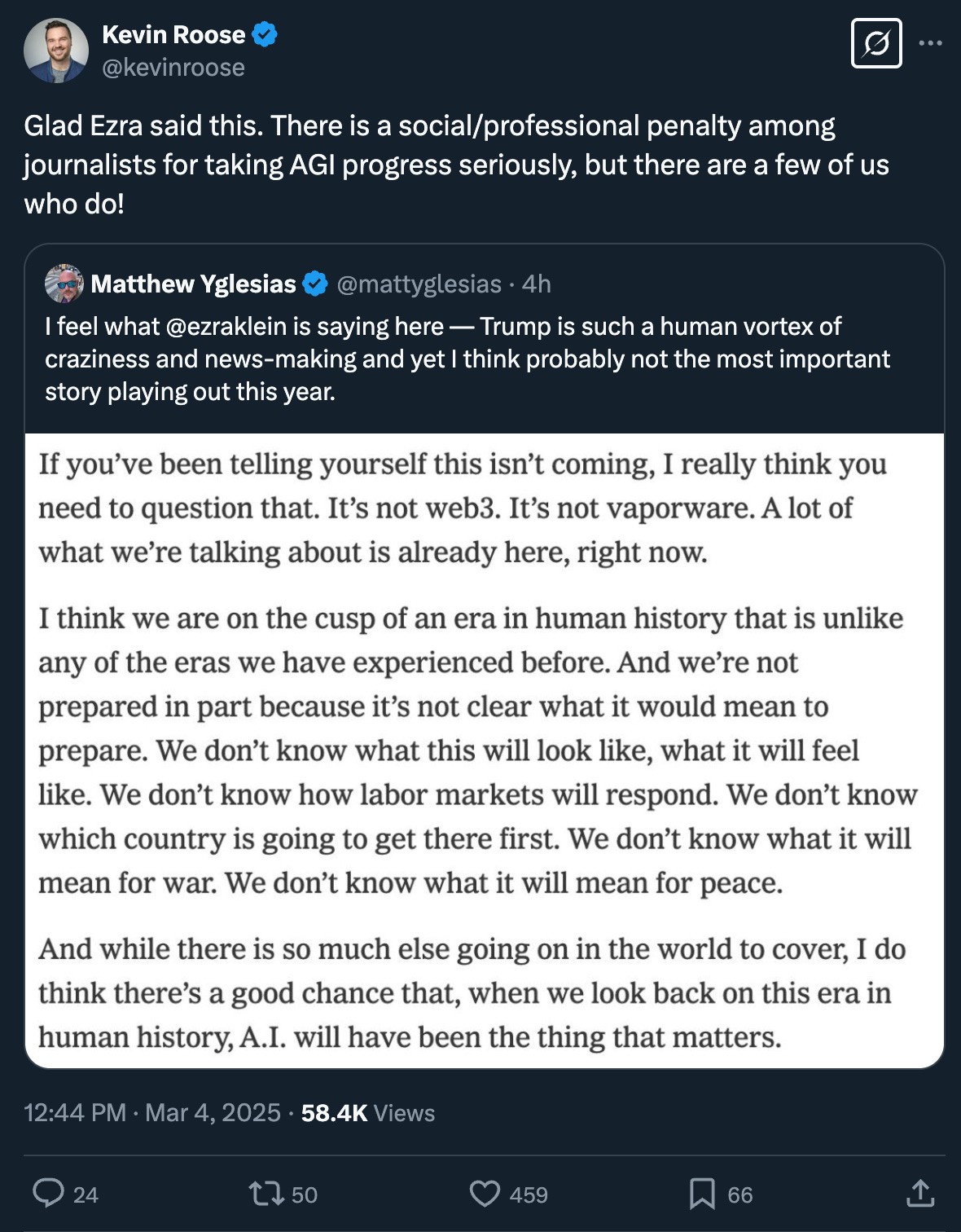

The Last Decade Of American Politics Summed Up In One Post

An Elon Musk Conspiracy Theory Possibly Confirmed

Vivian Wilson, Elon Musk’s transgender daughter, seemingly confirmed a theory that’s been floating around for the last few weeks about Musk. “My assigned sex at birth was a commodity that was bought and paid for. So when I was feminine as a child and then turned out to be transgender, I was going against the product that was sold,” she wrote on Threads yesterday. “That expectation of masculinity that I had to rebel against all my life was a monetary transaction.”

If you haven’t been following this, a graphic has been going around, claiming that all of Musk’s known children were assigned male at birth and that Musk was likely paying for sex-selective in vitro fertilization to ensure that he only had male children. Which would explain how quickly his politics shifted when Wilson came out as trans in 2020. He has since disowned Wilson and has told reporters he considers her dead.

Bernie’s On Tour

TUNE IN to our rally in Altoona, WI, where we discuss how we take on the greed of the billionaire class and create a government that works for all and not just the few.

— Bernie Sanders (@BernieSanders)

10:31 PM • Mar 9, 2025

Sen. Bernie Sanders did what every single Democrat (and I mean all of them) should have done two months ago and hit the road on a national campaign against the Trump administration and DOGE. He is, so far, the only Democrat doing it, though there’s some chatter that Rep. Alexandria Ocasio-Cortez will be joining him soon and organizing her own events. A lot of readers have been emailing me asking what exactly the Democrats can do and, well, this is as good an idea as any, frankly. The internet is flooded with fascist garbage (see above), so they should go outside, connect with actual people, and, in the process, make a lot of content they can flood the zone back with.

Also, click here for a wild video of a senior citizen that showed up to watch Sanders speak rocking a Hasan Piker shirt. Acts like The Armed and Laura Jane Grace have been opening for Sanders, which is also pretty cool. But it’s not just Sanders and AOC who are finally wrapping their heads around the current moment.

Majority Report host Sam Seder went on Jubilee, that YouTube channel that makes conservatives and liberals argue for internet traffic, and, honestly, knocked it out of the park. The video, in which he debates a bunch of young Republicans, is hard to watch, but I think he did a pretty good job. Definitely better than how Charlie Kirk did on his episode.

Ultimately, though, the Democrats have one real job right now. As TV writer Grace Freud wrote on X last week, “I have a new theory called The Guy theory of politics. No democrat is The Guy right now. They have no The Guy. You need a The Guy to even possibly confront Trump who has insane levels of The Guy-ness not seen since 2008 Obama.” Democrats need to find a Guy (not gender specific). And they need to find one fast.

JD Vance Tries To Get In On The Meme (And Fails)

Vice President JD Vance tried to get in on the rare Vances meme. He, of course, skipped the ones where he looks like a grotesque monster or gross little boy, opting instead to share one where he’s been photoshopped onto Leonardo DiCaprio. Elon Musk liked it, I guess. Don’t worry, though, someone fixed it. Either way, the meme’s probably over now.

A Twitch-Streaming AI Keeps Trying To Kill Itself

Claude Plays Pokémon is a Twitch stream where the AI model Claude, developed by Anthropic, tries to play through Pokémon Red and Blue. And while it’s not as fun or exciting as Twitch Plays Pokémon, arguably one of the best things to ever happen online, it has been an interesting experiment.

The AI was instructed to name the Pokémon it caught and immediately started being more protective of them in battle once it had named them. It’s also very bad at navigating the map. It spent three days stuck in Cerulean City because it couldn’t figure out the hedges. And it’s currently stuck again in Mt. Moon. It’s been there a week and is now crashing out hard, implementing what it’s calling “the blackout strategy” to kill itself in-game, possibly as a way to get out of the dungeon.

It’s All Kicking Off On The Balatro Subreddit

The subreddit for the wacky poker game Balatro (which recently consumed my entire life) is in full meltdown mode right now. There are, apparently, a few issues at play. The first, and most important, is that the subreddit is very divided over AI art. Some users want to be able to post it, others do not.

The pseudonymous creator of the game, LocalThunk, for the record, is not a fan of AI art. They recently wrote on Bluesky, “I don’t condone AI-generated ‘art’.” One of the users on the subreddit who wanted to allow AI art, however, was a mod named u/DrTankHead, who also runs a Balatro erotica subreddit. Yeah, I know.

The horny AI evangelist mod responded to the controversy over on the Balatro erotica subreddit they’re still modding and wrote a big long thing about why they wanted AI art to be allowed so badly. “It is NOT my goal to make this place AI centric, I’m thinking it'll be a 'day of the week' thing,” they wrote. “Where non-NSFW art that is made with AI can be posted. My goal is to be objective and keep the space safe. (Safe-For-Work Sunday's?)” The explanation didn’t really clear anything up and, honestly, makes all of this even weirder, but oh well.

It’s best to let this just sort of wash over you and never think about it again. But while we’re talking about Balatro, LocalThunk published a blog post this week all about how it was created, which is a great read.

Nunchuck Tyler

@nunchucktylor You just bumped into Nunchuck Tylor!? #fyp #fypシ #fypage #fypシ゚viral #viralvideo #viraltiktok #tiktok

Some Stray Links

“Trump honored a cancer survivor. The boy's doctors now face his budget cuts.”

“DHS Detains Palestinian Student from Columbia Encampment, Advocates Say”

P.S. here’s a good post about pasta.

***Any typos in this email are on purpose actually***