Huffing Gas Town, Pt. 2: If I Could, I Would Download Claude Into A Furby And Beat It To Death With A Hammer

Years ago, I decided I was going to cover the world of cryptocurrency with a fairly open mind. If you are part of an emerging tech industry, you should be very worried when I start doing this lol. Because it only took me a few weeks of using crypto, talking to people who work in the industry, and covering the daily developments of that world to end up with some very specific questions. And the answer to those questions boiled down to crypto being a technology that was, on some level, deeply evil or deeply stupid. Depending on how in on the scam you are.

While I don’t think AI, specifically the generative kind, is a one-to-one with crypto, it has one important similarity: It only succeeds if they can figure out a way to force the entire world to use it. I think there’s a word for that!

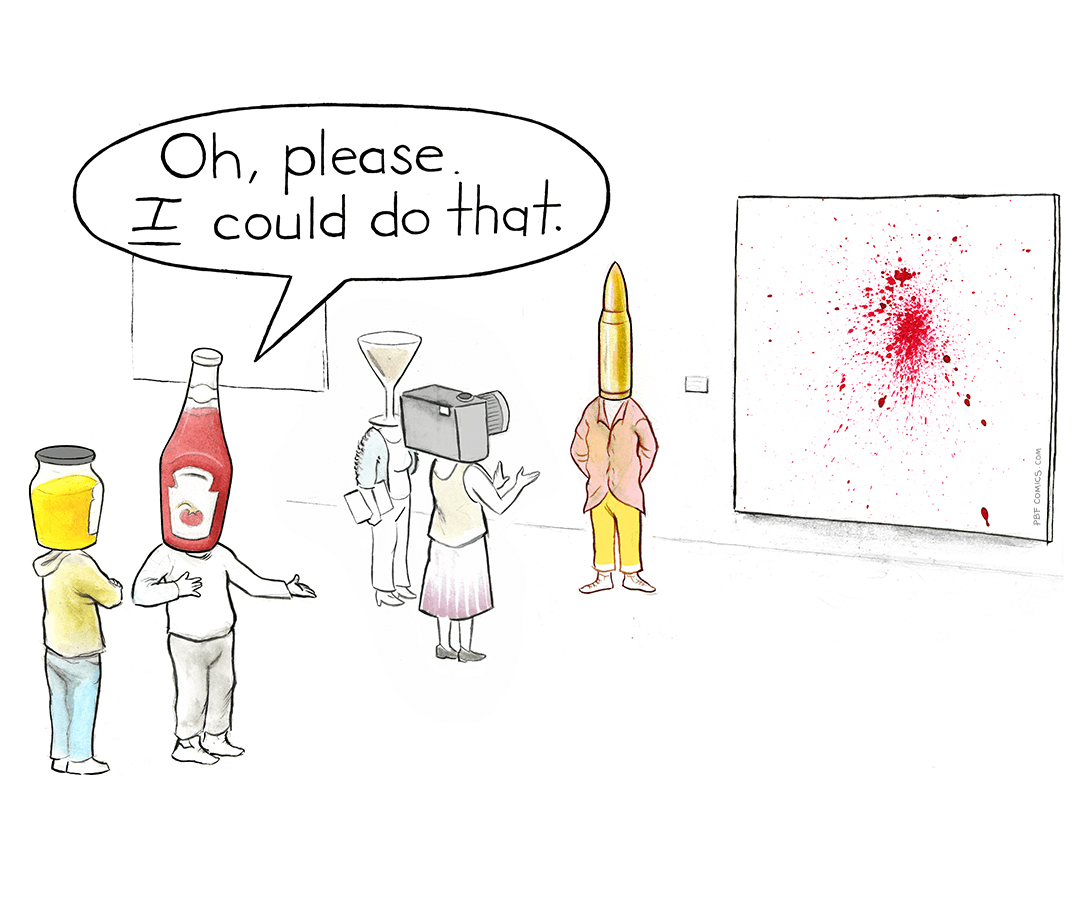

(If you want, tell me the kind of dystopia you’re trying to create and I can help build it for you.)

And so I have tried over the last few years to stake out a somewhat reasonable middle ground in my coverage of AI. Instead of immediately throwing up my hands and saying, “this shit sucks ass,” I’ve continually tried to find some kind of use for it. I’ve ordered groceries with it, tried to use it to troubleshoot technical problems and to design a better business plan for Garbage Day, and used it as a personal coach, a therapist, and a video editor. And I can confidently say it has failed every time. And I’ve come to realize that it fails in the exact same way every single time. I’m going to call this the AI imagination gap.

I don’t think I’m more creative than the average person, but I can honestly say I’ve been making something basically my entire life. As a teenager I wrote short stories, played in bands, drew cartoons for the school paper, and did improv (#millennial), and I’ve been lucky enough to be able to put those interests to use either personally or professionally in some way ever since. If I’m not writing, I’m working on music or standup; if I’m not doing those things, I’m podcasting (it counts!), or cooking, or noodling on some other weird little hobby. Jack of all trades, etc.

Every time I’ve tried to involve AI in one of my creative pursuits it has spit out the exact same level of meh. No matter the model, no matter the project, it simply cannot match what I have in my head. Which would be fine, but it also absolutely cannot match the fun of making the imperfect version of that idea that I might have made on my own. Instead, it simulates the act of brainstorming or creative exploration, turning it into a predatory pay-for-play process that, every single time, spits out deeply mediocre garbage. It charges you for the thrill of feeling like you’re building or making something and, just like a casino (or online dating, or pornography, or TikTok), cares more about that monetizable loop of engagement, of progress, than it does the finished product. What I’m saying is generative AI is a deeply expensive edging machine, but for your life.

My breaking point with AI started a few months ago, after I spent a week with ChatGPT trying to build a synth setup that it assured me over and over again was possible. Only on the third or fourth day of working through the problem did it suddenly admit that the core idea was never going to actually work. Which, from a business standpoint, is fine for OpenAI, of course. It kept me talking to it for hours. And, similarly, last night, after another fruitless round of vibe coding an app with Claude, I kept pressing it over and over to think of a better solution to a problem I was having. I knew, in my bones, that it was missing a more obvious, easier solution, and after the fifth time I reframed the problem it actually got mad at me!

(You can’t be talking to me like that, Claude.)

If we are to assume that this imagination gap, this life edging, this progress simulator, is a feature and not a bug (and there’s no reason not to, this is how every platform makes money), then the “AI revolution” suddenly starts to feel much more insidious. It is not a revolution in computing, but a revolution in accepting lower standards. I had a similar moment of clarity watching a panel at Bitcoin Miami in 2022, where the speakers started waxing philosophical on what they either did or did not realize was a world run on permanent, automated debt slavery. In the same way, if AI succeeds, we will have to live in a world where the joy of making something has turned into something you have to pay for. And if it really succeeds, you won’t even care that what you’re using an AI to make is total dog shit. Most frightening of all, these AI companies already don’t care about how dangerous a world like this would be.

OpenAI head Sam Altman is having another one of his spats with Elon Musk this week. And responding to a post Musk made highlighting deaths related to ChatGPT-psychosis, Altman wrote, “Almost a billion people use it and some of them may be in very fragile mental states. We will continue to do our best to get this right.” Continuing in his cutest widdle tech CEO voice, “It is genuinely hard; we need to protect vulnerable users, while also making sure our guardrails still allow all of our users to benefit from our tools.”

It’s hard, guys. All OpenAI wants is to make a single piece of software that can swallow the entire internet, and devour the daily machinations of our lives, and make us pay to interface with our souls, and worm its way into the lives of everyone on Earth. They can’t be blamed when it starts killing a few of its most vulnerable users! And they certainly can’t be blamed for not understanding that all of this is connected. Learning, creativity, self-discovery, pride in our accomplishments, that’s what makes us human. And if we lose that, or worse, give it up willingly, we lose everything.
