Before we get going — please enjoy my speech from Web Summit, Why Are All Tech Products Now Shit? I didn’t write the title.

What if what we're seeing today isn't a glimpse of the future, but the new terms of the present? What if artificial intelligence isn't actually capable of doing much more than what we're seeing today, and what if there's no clear timeline when it'll be able to do more? What if this entire hype cycle has been built, goosed by a compliant media ready and willing to take career-embellishers at their word?

Me, in March 2024.

I have been warning you for the best part of a year that generative AI has no killer apps and no way of justifying its valuations (February), that generative AI had already peaked (March), and I have pleaded with people to consider an eventuality where the jump from GPT-4 to GPT-5 was not significant, in part due to a lack of training data (April).

I shared concerns in July that the transformer-based architecture underpinning generative AI was a dead end, and that there were few ways we'd progress past the products we'd already seen, in part due to both the limits of training data and the limits of models that use said training data. In August, I summarized the Pale Horses of the AI Apocalypse (events, many of which have since come to pass, that would signify that the end is indeed nigh) and again added that GPT-5 would likely "not change the game enough to matter, let alone [add] a new architecture to build future (and more capable) models on."

Throughout these pieces I have repeatedly made the point that, separate from any lack of a core value proposition, training data drought, or unsustainable economics, generative AI is a dead end due to the limitations of probabilistic models that hallucinate, where they authoritatively state things that aren't true. The hallucination problem is no closer to being solved and, at least with the current technology, may never go away, which makes generative AI a non-starter for a great many business tasks, where you need a high level of reliability.

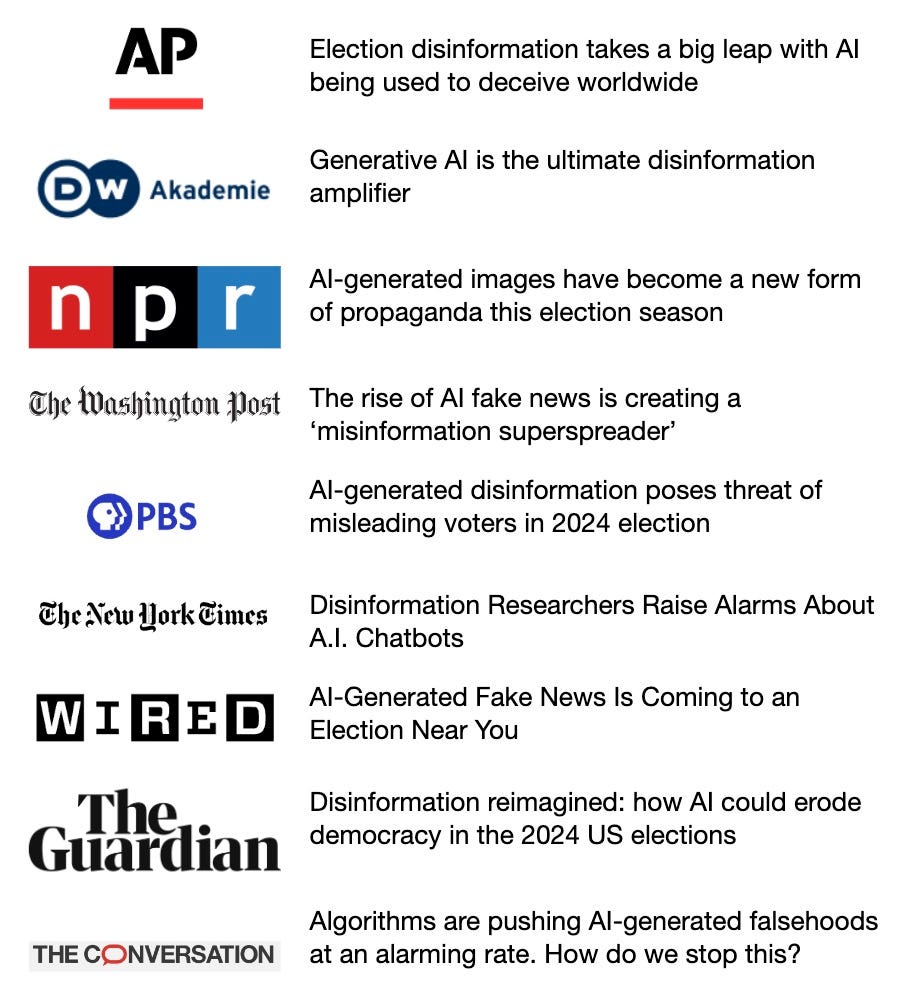

I have — since March — expressed great dismay about the credulousness of the media in their acceptance of the "inevitable" ways in which generative AI will change society, despite a lack of any truly meaningful product that might justify an environmentally-destructive industry led by a company that burns more than $5 billion a year and big tech firms spending $200 billion on data centers for products that people don't want.

The reason I'm repeating myself is that it's important to note how obvious the problems with generative AI have been, and for how long.

And you're going to need context for everything I'm about to throw at you.

Sidebar: To explain exactly what happened here, it's worth going over how these models work and are trained. I’ll keep it simple as it's a reminder.

A transformer-based generative AI model such as GPT — the technology behind ChatGPT — generates answers using "inference," which means it draws conclusions based off of its "training," which requires feeding it masses of training data (mostly text and images scraped from the internet). Both of these processes require you to use high-end GPUs (graphics processing units), and lots of them.

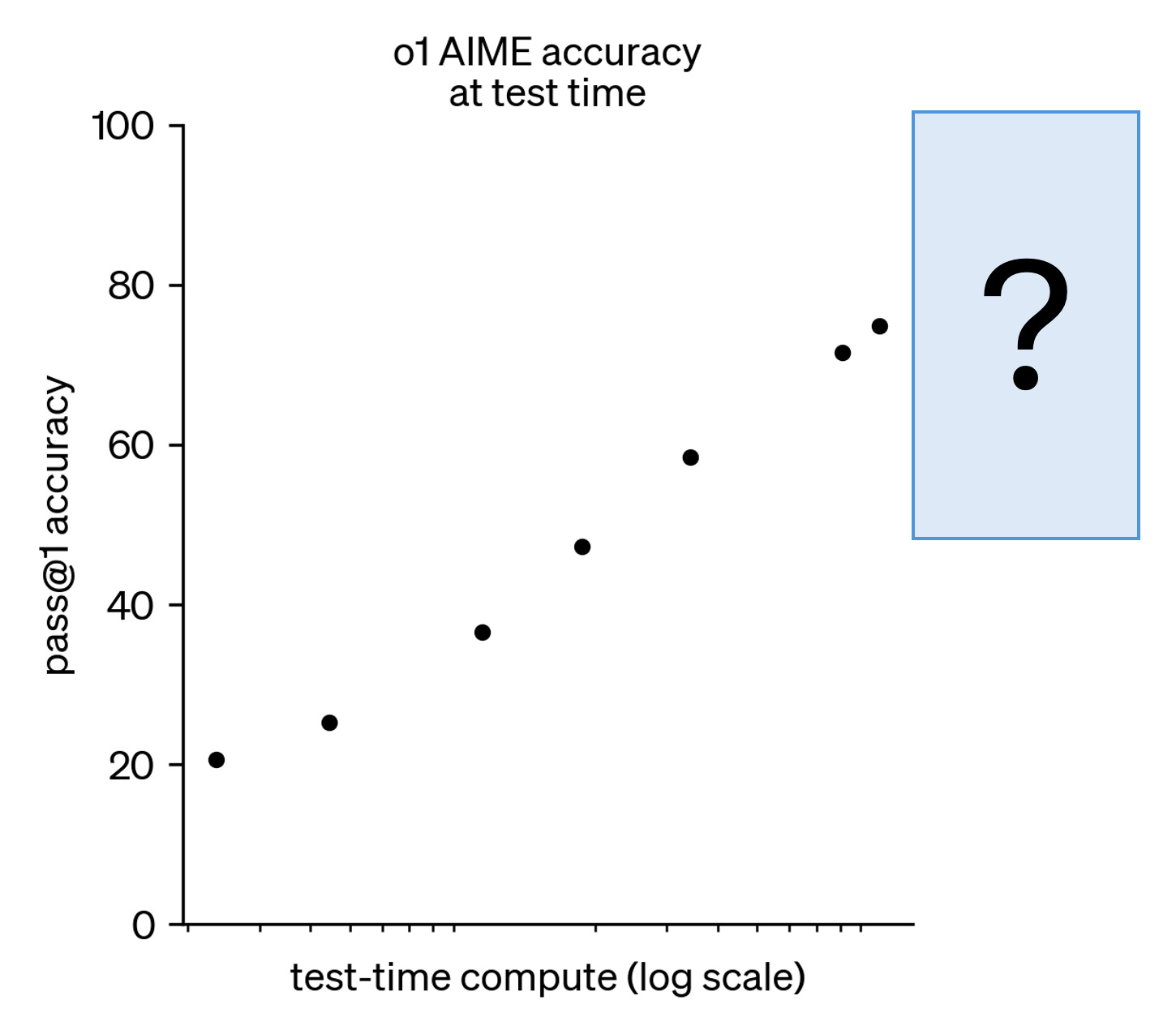

The theory was (is?) that the more training data and compute you throw at these models, the better they get. I have hypothesized for a while they'd have diminishing returns — both from running out of training data and based on the limitations of transformer-based models.

And there, as they say, is the rub.

A few weeks ago, Bloomberg reported that OpenAI, Google, and Anthropic are struggling to build more advanced AI, that OpenAI's "Orion" model (otherwise known as GPT-5) "did not hit the company's desired performance," and that "Orion is so far not considered to be as big a step up" from GPT-4, OpenAI's current model, as GPT-4 was from GPT-3.5. You'll be shocked to hear the reason is that "it’s become increasingly difficult to find new, untapped sources of high-quality, human-made training data that can be used to build more advanced AI systems," something I said would happen in March. The report also added that the "AGI bubble is bursting a little bit," something I said more forcefully in July.

I also want to stop and stare daggers at one particular point:

These issues challenge the gospel that has taken hold in Silicon Valley in recent years, particularly since OpenAI released ChatGPT two years ago. Much of the tech industry has bet on so-called scaling laws that say more computing power, data and larger models will inevitably pave the way for greater leaps forward in the power of AI.

The only people taking this as "gospel" have been members of the media unwilling to ask the tough questions and AI founders that don't know what the fuck they're talking about (or that intend to mislead). Generative AI's products have effectively been trapped in amber for over a year. There have been no meaningful, industry-defining products, because, as economist Daron Acemoglu said back in May, "more powerful" models do not unlock new features, or really change the experience, nor what you can build with transformer-based models. Or, put another way, a slightly better white elephant is still a white elephant.

Despite the billions of dollars burned and thousands of glossy headlines, it's difficult to point to any truly important generative-AI-powered product. Even Apple Intelligence, the only thing that Apple really had to add to the latest iPhone, is utterly dull, and largely based on on-device models.

Yes, there are people that use ChatGPT (200 million of them a week, allegedly, losing the company money with every prompt), but there is little to suggest that there's widespread adoption of actual generative AI software. The Information reported in September that between 0.1% and 1% of Microsoft's 440 million business customers were paying for its AI-powered Copilot, and in late October, Microsoft claimed that "AI is on pace to be a $10 billion-a-year business," which sounds good until you consider a few things:

- Microsoft has no "AI business" unit, which means that this annual "$10 billion" (or $2.5 billion a quarter) revenue figure is split across providing cloud compute services on Azure, selling Copilot to dumb people with Microsoft 365 subscriptions, selling GitHub Copilot, and basically anything else with "AI" on it. Microsoft is cherry-picking a number based on non-specific criteria and claiming it's a big deal, when it's actually pretty pathetic considering its capital expenditures will likely hit over $60 billion in 2024.

- Note the word "revenue," not "profit." How much is Microsoft spending to make $10 billion a year? OpenAI currently spends $2.35 to make $1, and Microsoft CFO Amy Hood said this October that OpenAI would cut into Microsoft's profits, losing it a remarkable $1.5 billion, "mainly because of an expected loss from OpenAI" according to CNBC. In October 2023, the Wall Street Journal reported that Microsoft was losing an average of $20 per user per month on GitHub Copilot, a product with over a million users. If true, this suggests losses of at least $200 million a year (based on documents I've reviewed, it has 1.8 million users as of a month ago, but I went on the lower end).

- Microsoft has still yet to break out exactly how much generative AI is increasing revenue in specific business units. Generally, if a company is doing well at something, they take great pains to make that clear. Instead, Microsoft chose in August to "revamp" its reporting structure to "give better visibility into cloud consumption revenue," which is something you do if you, say, anticipate you're going to have your worst day of trading in years after your next earnings as Microsoft did in October.
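To make the arithmetic behind those bullets easy to check, here is a rough back-of-envelope sketch. Every input is a figure quoted above; the function name is mine, and the assumption that the reported $20 monthly loss applies evenly to every user (including the larger 1.8 million user count) is a simplification, not something any of the reports confirm.

```python
# Back-of-envelope check of the figures quoted in the list above.
# Assumption: the reported $20 loss per user per month applies evenly to
# every GitHub Copilot user, which the reporting does not confirm.

def annual_loss(users: int, loss_per_user_per_month: float) -> float:
    """Yearly loss if every user loses the same amount each month."""
    return users * loss_per_user_per_month * 12

LOSS_PER_USER_PER_MONTH = 20.0  # Wall Street Journal figure, October 2023

# "Over a million users" (lower end) versus the 1.8 million figure.
low_estimate = annual_loss(1_000_000, LOSS_PER_USER_PER_MONTH)
high_estimate = annual_loss(1_800_000, LOSS_PER_USER_PER_MONTH)

# Microsoft's claimed AI revenue run rate versus its expected 2024 capex.
ai_revenue_run_rate = 10e9
quarterly_ai_revenue = ai_revenue_run_rate / 4
capex_2024 = 60e9
revenue_to_capex = ai_revenue_run_rate / capex_2024

print(f"Copilot loss at 1.0M users: ${low_estimate / 1e6:,.0f}M a year")
print(f"Copilot loss at 1.8M users: ${high_estimate / 1e6:,.0f}M a year")
print(f"AI revenue per quarter: ${quarterly_ai_revenue / 1e9:.1f}B")
print(f"AI revenue as a share of 2024 capex: {revenue_to_capex:.0%}")
```

On those assumptions the lower-end loss works out to $240 million a year, which is why "at least $200 million" is a conservative reading.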

I must be clear that every single one of these investments and products has been hyped with the whisper that they would get exponentially better over time, and that eventually the $200 billion in capital expenditures would spit out remarkable productivity improvements and fascinating new products that consumers and enterprises would buy in droves. Instead, big tech has found itself peddling increasingly-more-expensive iterations of near-identical Large Language Models — a direct result of them all having to use the same training data, which it’s now running out of.

The other assumption — those so-called scaling laws — has been that by simply building bigger data centers with more GPUs (the expensive, power-hungry graphics processing units used to both run and train these models) and throwing as much training data at them as possible, they'd simply start sprouting new capabilities, despite there being little proof that they'd do so. Microsoft, Meta, Amazon, and Google have all burned billions on the assumption that doing so would create something — be it a human-level "artificial general intelligence" or, I dunno, a product that would justify the costs — and it's become painfully obvious that it isn't going to work.

As we speak, outlets are already desperate to try and prove that this isn't a problem. The Information, in a similar story to Bloomberg's, attempted to put lipstick on the pig of generative AI, framing the lack of meaningful progress with GPT-5 as fine, because OpenAI can combine its GPT-5 model with its o1 "reasoning" model, which will then do something of some sort, such as "write a lot more very difficult code" according to OpenAI CEO and career liar Sam Altman, who intimated in May that GPT-5 may function like a "virtual brain."

Chief Valley Cheerleader Casey Newton wrote on Platformer last week that diminishing returns in training models "may not matter as much as you would guess," with his evidence being that Anthropic, which he claims "has not been prone to hyperbole," does not think that scaling laws are ending. To be clear, in a 14,000-word op-ed that Newton wrote two pieces about, Anthropic CEO Dario Amodei said that "AI-accelerated neuroscience is likely to vastly improve treatments for, or even cure, most mental illness," the kind of hyperbole that should have you tarred and feathered in public.

So, let me summarize:

- The main technology behind the entire "artificial intelligence" boom is generative AI (transformer-based models like OpenAI's GPT-4, and soon GPT-5), and said technology has peaked, with diminishing returns from the only ways of making these models "better" (feeding them training data and throwing tons of compute at them) suggesting that we may have, as I've said before, reached Peak AI.

- Generative AI is incredibly unprofitable. OpenAI, the biggest player in the industry, is on course to lose more than $5 billion this year, with competitor Anthropic (which also makes its own transformer-based model, Claude) on course to lose more than $2.7 billion this year.

- Every single big tech company has thrown billions — as much as $75 billion in Amazon's case in 2024 alone — at building the data centers and acquiring the GPUs to populate said data centers specifically so they can train their models or other companies' models, or serve customers that would integrate generative AI into their businesses, something that does not appear to be happening at scale.

- Worse still, many of the companies integrating generative AI do so by connecting to models made by either OpenAI or Anthropic, both of whom are running unprofitable businesses, and likely charging nowhere near enough to cover their costs. As I wrote in the Subprime AI Crisis in September, in the event that these companies start charging what they actually need to, I hypothesize it will multiply the costs of their customers to the point that they can't afford to run their businesses — or, at the very least, will have to remove or scale back generative AI functionality in their products.

The entire tech industry has become oriented around a dead-end technology that requires burning billions of dollars to provide inessential products that cost them more money to serve than anybody would ever pay. Their big strategy was to throw even more money at the problem until one of these transformer-based models created a new, more useful product — despite the fact that every iteration of GPT and other models has been, well, iterative. There has never been any proof (other than benchmarks that are increasingly easier to game) that GPT or other models would become conscious, nor that these models would do more than they do today, or three months ago, or even a year ago.

Yet things can, believe it or not, get worse.

The AI boom helped the S&P 500 hit record high levels in 2024, largely thanks to chip giant NVIDIA, a company that makes both the GPUs necessary to train and run generative AI models and the software architecture behind them. Part of NVIDIA's remarkable growth has been its ability to capitalize on the CUDA architecture — the software layer that lets you do complex computing with GPUs, rather than simply use them to render video games in increasingly higher resolution — and, of course, continually create new GPUs to sell for tens of thousands of dollars to tech companies that want to burn billions of dollars on generative AI, leading the company's stock to pop more than 179% over the last year.

Back in May, NVIDIA CEO and professional carnival barker Jensen Huang said that the company was now "on a one-year rhythm" in AI GPU production, with its latest "Blackwell" GPUs (specifically the B100, B200 and GB200 models used for generative AI) supposedly due at the end of 2024 but now delayed until at least March 2025.

Before we go any further, it's worth noting that when I say "GPU," I don't mean the one you'd find in a gaming PC, but a much larger chip put in a specialized server with multiple other GPUs, all integrated with specialized casing, cooling, and networking infrastructure. In simple terms, the things necessary to make sure all these chips work together efficiently, and also stop them from overheating, because they get extremely hot and are running at full speed, all the time.

The initial delay of the new Blackwell chips was caused by a (now-fixed) design flaw in production, but as I've suggested above, the problem isn't just creating the chips — it's making sure they actually work, at scale, for the jobs they're bought for.

But what if that, too, wasn't possible?

A few days ago, The Information reported that NVIDIA is grappling with the oldest problem in computing: how to cool the fucking things. According to the report, NVIDIA has been asking suppliers to change the design of its 3,000-pound, 72-GPU server racks "several times" to overcome overheating problems, a design The Information calls "the most complicated design NVIDIA had ever come up with." A few months after revealing the racks, engineers found that they...didn't work properly, even with NVIDIA's smaller 36-chip racks, and have been scrambling to fix it ever since.

While one can dazzle investors with buzzwords and charts, the laws of physics are a far harsher mistress, and if NVIDIA is struggling mere months before the first installations are to begin, it's unclear how it practically launches this generation of chips, let alone continues its yearly cadence. The Information reports that these changes have been made late in the production process, which is scaring customers that desperately need them so that their models can continue to do something they'll work out later. To quote The Information:

Two executives at large cloud providers that have ordered the new chips said they are concerned that such last-minute difficulties might push back the timeline for when they can get their GPU clusters up and running next year.

The fact that NVIDIA is having such significant difficulties with thermal performance is very, very bad. These chips are incredibly expensive (as much as $70,000 apiece) and will be running, as I've mentioned, at full speed, generating an incredible amount of heat that must be dissipated, while sat next to anywhere from 35 to 71 other chips, which will in turn be densely packed so that you can cram more servers into a data center. New, more powerful chips require entirely new methods to rack-mount, operate and cool them, and all of these parts must operate in sync, as overheating GPUs will die. While these units are big, some of their internal components are microscopic in size, and unless properly cooled, their circuits will start to crumble when roasted by a guy typing "Garfield with Gun" into ChatGPT.

Remember, Blackwell is supposed to represent a major leap forward in performance. If NVIDIA doesn’t solve its cooling problem — and solve it well — its customers will undoubtedly encounter thermal throttling, where the chip reduces speed in order to avoid causing permanent damage. It could eliminate any performance gains obtained from the new architecture and new manufacturing process, despite costing much, much more than its predecessor.
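To show how throttling can eat a generational gain, here is a toy model. It is a sketch under loud assumptions: performance is treated as scaling linearly with clock speed, and every number in it is hypothetical rather than a measured Blackwell figure. The function and parameter names are mine.

```python
# Toy model of thermal throttling. Assumptions: throughput scales linearly
# with clock speed, and all numbers below are hypothetical illustrations,
# not measurements of any real NVIDIA chip.

def effective_speedup(nominal_speedup: float,
                      throttled_time_fraction: float,
                      throttled_clock_ratio: float) -> float:
    """Average speedup over the previous chip once throttling is included.

    nominal_speedup: speedup at full clock (2.0 means twice as fast).
    throttled_time_fraction: share of time spent throttled, from 0 to 1.
    throttled_clock_ratio: clock while throttled relative to full clock.
    """
    if not 0.0 <= throttled_time_fraction <= 1.0:
        raise ValueError("throttled_time_fraction must be between 0 and 1")
    if not 0.0 < throttled_clock_ratio <= 1.0:
        raise ValueError("throttled_clock_ratio must be in (0, 1]")
    # Time-weighted average clock, relative to the full clock.
    average_clock = ((1.0 - throttled_time_fraction)
                     + throttled_time_fraction * throttled_clock_ratio)
    return nominal_speedup * average_clock

# A hypothetical chip that is twice as fast on paper:
half_throttled = effective_speedup(2.0, 0.5, 0.5)    # throttled half the time
always_throttled = effective_speedup(2.0, 1.0, 0.5)  # throttled all the time
print(half_throttled, always_throttled)
```

In this toy setup, a chip that is twice as fast on paper but permanently throttled to half its clock ends up no faster than its predecessor, which is the scenario the paragraph above warns about.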

NVIDIA's problem isn't just bringing these thermal performance issues under control, but both keeping them under control and being able to educate their customers on how to do so. NVIDIA has, according to The Information, repeatedly tried to influence its customers' server integrations to follow its designs because it thinks it will "lead to better performance," but in this case, one has to worry if NVIDIA's Blackwell chips can be reliably cooled.

While NVIDIA might be able to fix this problem in isolation within its racks, it remains to be seen how this works at scale as they ship and integrate hundreds of thousands of Blackwell GPUs starting in the front half of 2025.

Things also get a little worse when you realize how these chips are being installed: in giant “supercomputer” data centers where tens of thousands of GPUs (or as many as a hundred thousand, in the case of Elon Musk’s “Colossus” data center) run in concert to power generative AI models. The Wall Street Journal reported a few weeks ago that building these vast data centers creates entirely new engineering challenges, with one expert saying that big tech companies could be using as much as half of their capital expenditures on replacing parts that have broken down, in large part because these clusters are running their GPUs at full speed, at all times.

Remember, the capital expenditures on generative AI and the associated infrastructure have gone over $200 billion in the last year. If half of that’s dedicated to replacing broken gear, what happens when there’s no path to profitability?

In any case, NVIDIA doesn’t care. It’s already made billions of dollars selling Blackwell GPUs — they're sold out for a year, after all — and will continue to do so for now, but any manufacturing or cooling issues will likely be costly.

And even then, at some point somebody has to ask the question: why do we need all these GPUs if we've reached peak AI? Despite the remarkable "power" of these chips, NVIDIA's entire enterprise GPU business model centers around the idea that throwing more power at these problems will finally create some solutions.

What if that isn't the case?

The tech industry is over-leveraged, having doubled, tripled, quadrupled down on generative AI — a technology that doesn't do much more than it did a few months ago and won't do much more than it can do now. Every single big tech company has piled tens of billions of dollars into building out massive data centers with the intent of "capturing AI demand," yet never seemed to think whether they were actually building things that people wanted, or would pay for, or would somehow make the company money.

While some have claimed that "agents are the next frontier," the reality is that agents may be the last generative AI product — multiple Large Language Models and integrations bouncing off of each other in an attempt to simulate what a human might do at a cost that won't be sustainable for the majority of businesses. While Anthropic's demo of its model allegedly controlling a few browser windows with a prompt might have seemed impressive to credulous people like Casey Newton, these were controlled demos which Anthropic added were "slow" and "made lots of mistakes." Hey, almost like it's hallucinating! I sure hope they fix that totally unfixable problem.

Even if they do, Anthropic will have successfully replaced...an entry-level data worker position at an indeterminate and likely unprofitable price. And in many organizations, those jobs had already been outsourced, or automated, or staffed with cheaper contractors.

The obscenity of this mass delusion is nauseating — a monolith to bad decision-making and the herd mentality of tech's most powerful people, as well as an outright attempt to manipulate the media into believing something was possible that wasn't. And the media bought it, hook, line, and sinker.

Hundreds of billions of dollars have been wasted building giant data centers to crunch numbers for software that has no real product-market fit, all while trying to hammer it into various shapes to make it pretend that it's alive, conscious, or even a useful product.

There is no path, from what I can see, to turn generative AI and its associated products into anything resembling sustainable businesses, and the only path that big tech appeared to have was to throw as much money, power, and data at the problem as possible, an avenue that appears to be another dead end.

And worse still, nothing has really come out of this movement. I've used a handful of AI products that I've found useful — an AI powered journal, for example — but these are not the products that one associates with "revolutions," but useful tools that would have been a welcome surprise if they didn't require burning billions of dollars, blowing past emissions targets and stealing the creative works of millions of people to train them.

I truly don't know what happens next, but I'll walk you through what I'm thinking.

If we're truly at the diminishing returns stage of transformer-based models, it will be extremely difficult to justify buying further iterations of NVIDIA GPUs past Blackwell. The entire generative AI movement lives and dies by the idea that more compute power and more training data makes these things better, and if that's no longer the case, there's little reason to keep buying bigger and better. After all, what's the point?

Even now, what exactly happens when Microsoft or Google has racks-worth of Blackwell GPUs? The models aren't going to get better.

This also makes the lives of OpenAI and Anthropic that much more difficult. Sam Altman has grown rich and powerful lying about how GPT will somehow lead to AGI, but at this point, what exactly is OpenAI meant to do? The only way it’s ever been able to develop new models is by throwing masses of compute and training data at the problem, and its only other choice is to start stapling its reasoning model onto its main Large Language Model, at which point something happens, something so good that literally nobody working for OpenAI or in the media appears to be able to tell you what it is.

Putting that aside, OpenAI is also a terrible business that has to burn $5 billion to make $3.4 billion, with no proof that it’s capable of bringing down costs. The constant refrain I hear from VCs and AI fantasists is that "chips will bring down the cost of inference," yet I don't see any proof of that happening, nor do I think it'll happen quickly enough for these companies to turn things around.

And you can feel the desperation, too. OpenAI is reportedly looking at ads as a means to narrow the gap between its revenues and losses. As I pointed out in Burst Damage, introducing an advertising revenue stream would require significant upfront investment, both in terms of technology and talent. OpenAI would need a way to target ads, and a team to sell advertising — or, instead, use a third-party ad network that would take a significant bite out of its revenue.

It’s unclear how much OpenAI could charge advertisers, or what percentage of its reported 200 million weekly users have an ad-blocker installed. Or, for that matter, whether ads would provide a perverse incentive for OpenAI to enshittify an already unreliable product.

Facebook and Google — as I’ve previously noted — have made their products manifestly worse in order to increase the amount of time people spend on their sites, and thus, the number of ads they see. In the case of Facebook, it buried your newsfeed under a deluge of AI-generated sludge and “recommended content.” Google, meanwhile, has progressively degraded the quality of its search results in order to increase the volume of queries it received as a means of making sure users saw more ads.

OpenAI could, just as easily, fall into the same temptation. Most people who use ChatGPT are trying to accomplish a specific task (like writing a term paper, or researching a topic, or whatever), and then they leave. And so, the number of ads they’d each conceivably see will undoubtedly be low compared to a social network or search engine. Would OpenAI try to get users to stick around longer, and write more prompts, by crippling the performance of its models?

Even if OpenAI listens to its better angels, the reality still stands: ads won’t dam the rising tide of red ink that promises to eventually drown the company.

This is a truly dismal situation where the only options are to stop now, or continue burning money until the heat gets too much. It cost $100 million to train GPT-4o, and Anthropic CEO Dario Amodei estimated a few months ago that training future models will cost $1 billion to $10 billion, with one researcher claiming that training OpenAI's GPT-5 will cost around $1 billion.

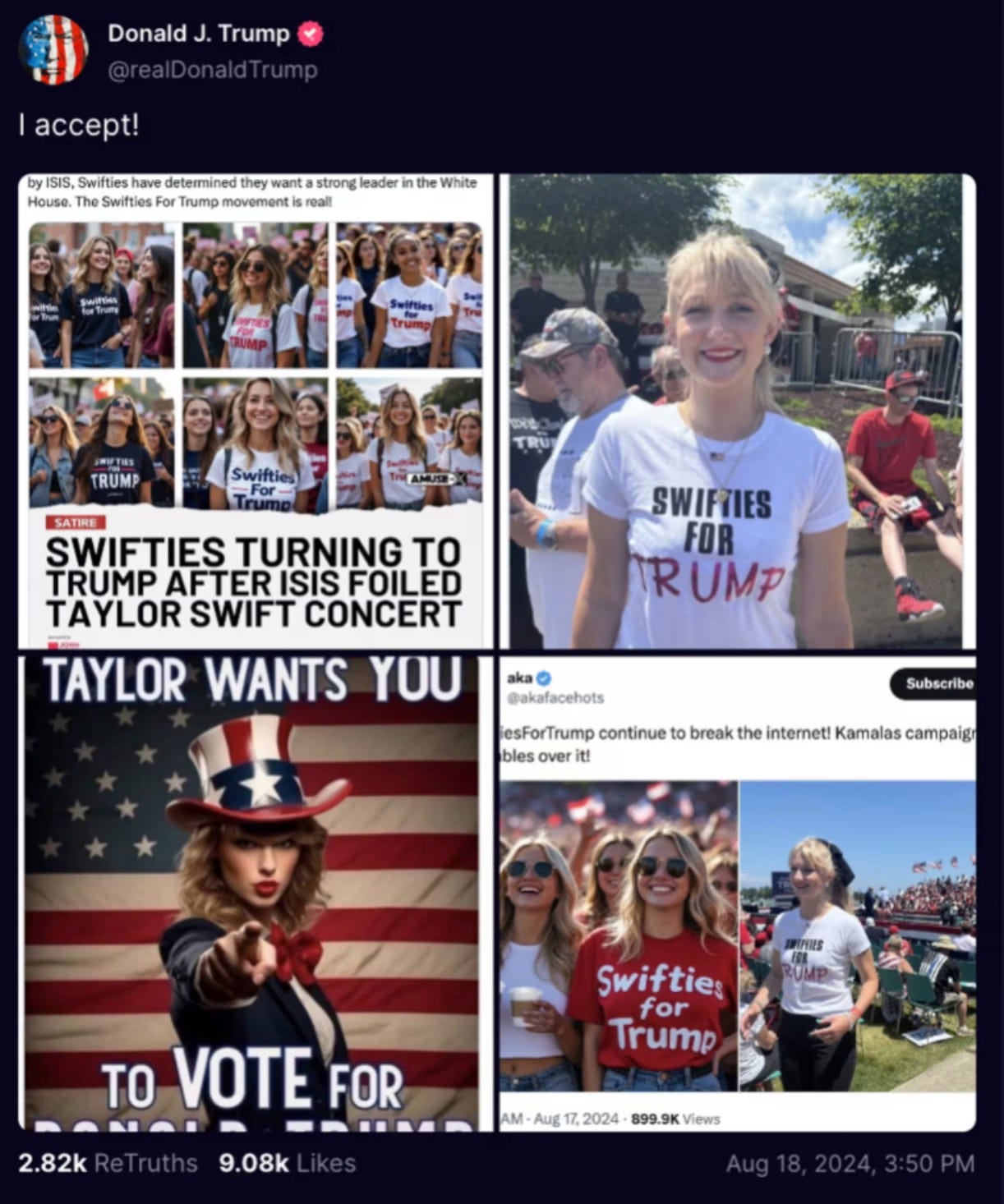

And that’s before mentioning any, to quote a Rumsfeldism, “unknown unknowns.” Trump’s election, at the risk of sounding like a cliché, changes everything and in ways we don’t yet fully understand. According to the Wall Street Journal, Musk has successfully ingratiated himself with Trump, thanks to his early and full-throated support of his campaign. He’s now reportedly living in Mar-a-Lago, sitting on calls with world leaders, and whispering in Trump’s ear as he builds his cabinet.

And, as The Journal claims, his enemies fear that he could use his position of influence to harm them or their businesses — chiefly Sam Altman, who is “persona non grata” in Musk’s world, largely due to the new for-profit direction of OpenAI. While it’s likely that these companies will fail due to inevitable organic realities (like running out of money, or not having a product that generates a profit), Musk’s enemies must now contend with a new enemy — one with the full backing of the Federal government, and that neither forgives nor forgets.

And, crucially, one that’s not afraid to bend ethical or moral laws to further his own interests — or to inflict pain on those perceived as having slighted him.

Even if Musk doesn’t use his newfound political might to hurt Altman and OpenAI, he could still pursue the company as a private citizen. Last Friday, he filed for an injunction requesting a halt to OpenAI’s transformation from an ostensible non-profit to a for-profit business. Even if he ultimately fails, should Musk manage to drag the process out, or delay it temporarily, it could strike a terminal blow for OpenAI.

That’s because in its most recent fundraise, OpenAI agreed that it would convert its recent $6.6 billion equity investment into high-interest debt, should it fail to successfully convert into a for-profit business within a two-year period. This was a tight deadline to begin with, and OpenAI can’t afford any delays. The interest payments on that debt would massively increase its cash burn, and it would undoubtedly find it hard to obtain further outside investment.

Outside of a miracle, we are about to enter an era of desperation in the generative AI space. We're two years in, and we have no killer apps (no industry-defining products) other than ChatGPT, a product that burns billions of dollars and nobody can really describe. Neither Microsoft, nor Meta, nor Google, nor Amazon seems to be able to come up with a profitable use case, let alone one their users actually like, nor have any of the people that have raised billions of dollars in venture capital for anything with "AI" taped to the side, and investor interest in AI is cooling.

It's unclear how much further this farce continues, if only because it isn't obvious what it is that anybody gets by investing in future rounds in OpenAI, Anthropic, or any other generative AI company. At some point they must make money, and the entire dream has been built around the idea that all of these GPUs and all of this money would eventually spit out something revolutionary.

Yet what we have is clunky, ugly, messy, larcenous, environmentally-destructive and mediocre. Generative AI was a reckless pursuit, one that shows a total lack of creativity and sense in the minds of big tech and venture capital, one where there was never anything really impressive other than the amount of money it could burn and the number of times Sam Altman could say something stupid and get quoted for it.

I'll be honest with you, I have no idea what happens here. The future was always one that demanded that big tech spend more to make even bigger models that would at some point become useful, and that isn't happening. In pursuit of doing so, big tech invested hundreds of billions of dollars into infrastructure specifically to follow one goal, and put AI front and center at their businesses, claiming it was the future without ever considering what they'd do if it wasn't.

The revenue isn't coming. The products aren't coming. "Orion," OpenAI's next model, will underwhelm, as will its competitors' models, and at some point somebody is going to blink in one of the hyperscalers, and the AI era will be over. Almost every single generative AI company that you’ve heard of is deeply unprofitable, and there are few innovations coming to save them from the atrophy of the foundation models.

I feel sad and exhausted as I write this, drained as I look at the many times I’ve tried to warn people, frustrated at the many members of the media that failed to push back against the overpromises and outright lies of people like Sam Altman, and full of dread as I consider the economic ramifications of this industry collapsing. Once the AI bubble pops, there are no other hyper-growth markets left, which will in turn lead to a bloodbath in big tech stocks as they realize that they’re out of big ideas to convince the street that they’re going to grow forever.

There are some that will boast about “being right” here, and yes, there is some satisfaction in being so. Nevertheless, that satisfaction is tempered by knowing that the result of this bubble bursting will be massive layoffs, a dearth of venture capital funding, and a much more fragile tech ecosystem.

I’ll end with a quote from Bubble Trouble, a piece I wrote in April:

How do you solve all of these incredibly difficult problems? What does OpenAI or Anthropic do when they run out of data, and synthetic data doesn't fill the gap, or worse, massively degrades the quality of their output? What does Sam Altman do if GPT-5 — like GPT-4 — doesn't significantly improve its performance and he can't find enough compute to take the next step? What do OpenAI and Anthropic do when they realize they will likely never turn a profit? What does Microsoft, or Amazon, or Google do if demand never really takes off, and they're left with billions of dollars of underutilized data centers? What does Nvidia do if the demand for its chips drops off a cliff as a result?

I don't know why more people aren't screaming from the rooftops about how unsustainable the AI boom is, and the impossibility of some of the challenges it faces. There is no way to create enough data to train these models, and little that we've seen so far suggests that generative AI will make anybody but Nvidia money. We're reaching the point where physics — things like heat and electricity — are getting in the way of progressing much further, and it's hard to stomach investing more considering where we're at right now is, once you cut through the noise, fairly god damn mediocre. There is no iPhone moment coming, I'm afraid.

I was right then and I’m right now. Generative AI isn’t a revolution, it’s an evolution of a tech industry overtaken by growth-hungry management consultant types that neither know the problems that real people face nor how to fix them. It’s a sickening waste, a monument to the corrupting force of growth, and a sign that the people in power no longer work for you, the customer, but for the venture capitalists and the markets.

I also want to be clear that none of these companies ever had a plan. They believed that if they threw enough GPUs together they would turn generative AI (probabilistic models for generating stuff) into some sort of sentient computer. It’s much easier, and more comfortable, to look at the world as a series of conspiracies and grand strategies, and far scarier to see it for what it is: extremely rich and powerful people that are willing to bet insanely large amounts of money on what amounts to a few PDFs and their gut.

This is not big tech’s big plan to excuse building more data centers; it’s the death throes of twenty years of growth-at-all-costs thinking, because throwing a bunch of money at more servers and more engineers always seemed to create more growth. In practice, this means that the people in charge and the strategies they employ are born not of an interest in improving the lives of their customers, but of an interest in increasing revenue growth, which means the products they create aren’t really about solving any problem other than “what will make somebody give me more money,” which doesn’t necessarily mean “provide them with a service.”

Generative AI is the perfect monster of the Rot Economy — a technology that lacks any real purpose sold as if it could do literally anything, one without a real business model or killer app, proliferated because big tech no longer innovates, but rather clones and monopolizes. Yes, this much money can be this stupid, and yes, they will burn billions in pursuit of a non-specific dream that involves charging you money and trapping you in their ecosystem.

I’m not trying to be a doomsayer, just like I wasn’t trying to be one in March. I believe all of this is going nowhere, and that at some point Google, Microsoft, or Meta is going to blink and pull back on their capital expenditures. And before then, you’re going to get a lot of desperate stories about how “AI gains can be found outside of training new models” to try and keep the party going, despite reality flicking the lights on and off and threatening to call the police.

I fear for the future for many reasons, but I always have hope, because I believe that there are still good people in the tech industry and that customers are seeing the light. Bluesky feels different — growing rapidly, competing with both Threads and Twitter, all while selling an honest product and an open protocol.

There are other ideas for the future that aren’t born of the scuzzy mindset of billionaire shitheels like Sundar Pichai and Sam Altman, and they can, and will, grow out of the ruins created by these kleptocrats.