Think About Supporting Garbage Day!

It’s $5 a month or $45 a year and you get Discord access, two additional weekly issues, and monthly trend reports. What a bargain! Hit the button below to find out more.

Maduro Was Offered Up To The Algorithm

Over the weekend, the Trump administration carried out Operation Absolute Resolve (groan), capturing — or, rather, kidnapping — Venezuelan dictator Nicolás Maduro and his wife in the middle of the night. The arrest is the clearest example yet of how thoroughly President Donald Trump and his cronies have transformed the machinery of American politics.

We’ve spent the last year covering all the ways the White House has embedded itself inside the feedback loop of online engagement, making flashy video edits of migrant arrests, deporting X users doxxed by far-right trolls, forcing the country to mourn the death of their favorite influencer, the list goes on and on. As blogger Cooper Lund recently wrote, “They are doing real, heinous things to facilitate the creation of content, and we must be clear about that, but it is always in service of the creation of the content and not durable policy.”

And it feels silly to say this — after years of Trumpian madness — but there is seemingly no limit to how far they will go to feed the algorithm. No limit to their craven desire to dominate the attention economy. Per a New York Times report this weekend, Trump finally decided to capture Maduro because he wouldn’t stop posting clips of himself dancing. Sure why not. So we abducted him from his bed, photographed him aboard the USS Iwo Jima, and paraded him through the streets of New York City. Compare that to the 2006 execution of Saddam Hussein, where a single grainy cell phone video captured the moment of his death, was uploaded to YouTube, and then, for the most part, immediately removed. If this weekend had taken a darker turn, one wonders if the footage would have been directly uploaded on Trump’s Truth Social account.

According to official photos that were released by the White House, figures like CIA Director John Ratcliffe and Secretary of War (groan) Pete Hegseth watched the raid from a command center that had a big screen showing X.com search results for “Venezuela” on it. Deeply cringe? Of course. Everything Hegseth does reeks of profound desperation. His whole life feels like watching someone pee their pants in public.

(Photo by Molly Riley/The White House via Getty Images)

But it also means I’m being pretty literal when I say we all watched Maduro’s arrest play out on social media platforms like X, Bluesky, and TikTok, where, predictably, memes and propaganda made it impossible to tell what was real and what wasn’t. Most of the footage you’ve seen of Venezuelans celebrating appears to be either old World Cup footage or shot in Miami. Though, it does seem like Ratcliffe laughed at Trump saying “6-7” during his press conference announcing Maduro’s arrest. And, apparently, many Venezuelans thought something big might happen because McDonald’s released a new flavor of McFlurry back in November. “In Venezuela, McFlurries are basically our prophet and our version of The Mothman,” one user on X wrote. “It's a sign that a catastrophe will occur.” Also, the Latin American fanbase of the anime Jujutsu Kaisen had a field day this weekend because Maduro was blindfolded after being taken into custody.

Meanwhile, Polymarket traders cashed in on a betting pool for “Maduro out by January 31, 2026.” One account made a $400,000 profit after betting around $30,000 the day before Maduro was apprehended. It could have been a member of the Trump administration. It may also have been an employee at The New York Times or The Washington Post, both of which, according to Semafor, knew about Operation Absolute Resolve and chose not to report on it ahead of time. The other big winner of the Maduro arrest was Nike, which sold out of the tracksuit Maduro was photographed wearing.

Maduro’s arrest is connected to a new kind of politics I’ve spent the last year struggling to describe. A profoundly embarrassing collision of violent nationalism, illiterate social chatter, and memetic fascism that’s been spreading across the globe since the pandemic. We’ve seen hints for years now that the elites of the world are just as addicted to — and dependent on — the same social platforms that we are. Ignoring the near-constant public embarrassments of our shitposter ruling class that play out on platforms like X every day, our leaders are also digitally networking with each other behind closed doors. There’s Chatham House, the group chat that fried the minds of Silicon Valley’s most reactionary CEOs like Marc Andreessen, that’s been running since COVID started. Which is adjacent to the text message network of powerful men that convinced Elon Musk to buy Twitter in 2022. Last year, the Trump administration was caught planning out airstrikes in a Signal group. And days before the campaign in Venezuela, Republican operatives secretly teamed up with a far-right YouTuber to storm daycares in Minnesota. You take all of that and throw in last year’s Discord-based election in Nepal, the international white nationalist incel terror cells spreading across Telegram, and the fact Charlie Kirk’s killer allegedly carried out the attack for the members of his Discord channel and the picture couldn’t be clearer: Politics — and political violence — is now something performed, first and foremost, for an online audience. It almost doesn’t matter what happens irl if it makes noise online.

In fact, this weekend, Gustavo Petro, the president of Colombia, a country that might be next on Trump’s shit list, apparently learned of the Maduro extraction from a WhatsApp group for the video game Valorant, according to a screenshot he shared on X. (The guy running the Valorant group is now being inundated with messages.)

The closest description I’ve seen to the world we’re now watching take shape is the idea of “the network state.” In 2013, investor and Bitcoin evangelist Balaji Srinivasan coined the term to describe his utopian vision of new cities and countries being formed by what he called “cloud formations,” or the “infinity of subcultures outside the mainstream” that find each other online. Srinivasan, like every other guy in Silicon Valley blinded by naked, unregulated greed, didn’t account for how stupid this would all be in practice, however. And it turns out the end result isn’t some exciting patchwork of new communities. Instead, it’s a handful of poster regimes, rogue troll states, fueled by internet clout, where nothing matters unless it becomes content.

The following is a paid ad. If you’re interested in advertising, email me at ryan@garbageday.email and let’s talk. Thanks!

They can't scam you if they can't find you

The BBC caught scam call center workers on hidden cameras as they laughed at the people they were tricking.

One worker bragged about making $250k from victims. The disturbing truth? Scammers don’t pick phone numbers at random. They buy your data from brokers.

Once your data is out there, it’s not just calls. It’s phishing, impersonation, and identity theft. That’s why we recommend Incogni: They delete your info from the web, monitor and follow up automatically, and continue to erase data as new risks appear.

Try Incogni here and get 55% off your subscription with code DAYDEAL.

A Hundred Thousand

My big goal for 2025 was officially crossing 100,000 total readers. As we wound down for the year we were still a few hundred subscribers off and I had sort of accepted that it wasn’t going to happen. Then, magically, we rolled over on Christmas Eve. I am a little embarrassed to say that that number means a lot to me, but it does. Even in this era of massive follower counts and jaw-dropping traffic, it’s a big number!

I’ve written pieces that were read 100,000 times, made videos watched that many times, had tweets with that many retweets. But I’ve never hit that number of followers on any platform. Nor can I say I’m particularly proud of my most viral contributions to the web over the years. Most of them felt like I was playing some kind of video game. This feels different.

Garbage Day has, since day one, been a very personal labor of love. And to have so many people read it means the world to me. And I want to make sure we’re keeping our head on straight this year as we take bigger swings and expand our coverage. If you missed it before the break, we have a reader survey going right now. If you haven’t taken it, please do! We want to hear from you. Hit the green button below.

The Stranger Things Finale Was So Bad That Fans Are Convinced There’s Another Episode Surprise Dropping This Week

Adding to Garbage Day researcher Adam Bumas’ theory that 2025 was the year everyone decided to pretend the last 10 years never happened and turn the dial back to 2015, we’ve got another “The Johnlock Conspiracy” situation on our hands.

For the uninitiated, “Johnlock” was the ship name for John Watson and Sherlock Holmes from BBC’s Sherlock. “TJLC” was the fandom term for a very specific set of shippers who believed that the show’s January 2017 finale, where Holmes and Watson remained just friends, must be a smokescreen for the real finale where they ended up together.

This time around the ship is Byler, or Stranger Things fans who think that Will Byers and Mike Wheeler should have similarly ended up together in the finale. And, again like in 2017, fans are so angry about this that they’ve convinced themselves that there’s a secret second finale dropping this week. They’re calling it #ConformityGate and they believe that the last episode was all an illusion created by the show’s villain, Vecna, and that a new episode released on January 7th will confirm this.

If you want to go down this rabbit hole — some of it is somewhat convincing, if only because of how god awful bad the finale was — you can watch this TikTok and this TikTok and this TikTok. And then go from there. Godspeed.

Grok Is Generating CSAM

—by Adam Bumas

In December, X’s image generation tools started allowing users to take any image of a person and tell the site’s AI, Grok, to put them in a bikini or underwear, even if they’re minors or celebrities. It ignores any request to make these women and children naked, however, showing how easy it is to limit these tools’ capabilities. India has issued an ultimatum to X, but Elon Musk is too busy responding “🤣” to do anything about it. So far, the only apology we’ve seen is people telling Grok to generate one.

We don’t need to run down all the ways this is a nightmare for the human rights and personal safety of literally everyone with an online presence (especially women or anyone marginalized). Or how this is a complete dereliction of duty by anyone with the legal, technical and/or financial power to stop it. But there is the question of how and why this all started in the first place.

Our research shows a noticeable shift roughly around December 20th. We found multiple posts across X and Reddit from the days before, specifically noting that Grok ignored requests to put women in bikinis. But on December 22nd, we start to see the tide shifting, with more than one user successfully generating scantily clad images using Grok, then immediately demanding it make the clothes transparent. The change seems to have been implemented in advance of a new image editing functionality for Grok that launched on December 24th. This is part of a larger response across the AI industry to Google’s Nano Banana image generator, which WIRED reported is now seen as a standard-bearer for perving on women.

The AI arms race is playing out like many other recent tech revolutions, but on a much faster scale. If one model has a popular new feature, all the others have to copy it. Even if it’s “illegal sexual abuse material of women and children.”

This Hockey Podcast’s Heated Rivalry Recap Series Is Incredibly Good

@empty.netters Ilya is our consent KING 🥅 #hockey #heatedrivalry #tvshow

The Empty Netters hockey podcast has been doing a recap series for gay hockey romcom Heated Rivalry and it’s amazing. It’s basically the exact same tone and tenor of their usual hockey coverage, but applied to the show’s various romances. They also have the extremely correct take that Scott Hunter is the MVP of the show. Episode 5 was probably my favorite episode of TV from last year.

While we’re on the subject, if you haven’t explored Heated Rivalry director Jacob Tierney’s other work, please do yourself a favor and check out Letterkenny and Shoresy. They’re very, very different, but both feature Tierney’s signature visual style. Not sure anyone is directing music montages like him right now. He’s the master of the slow dramatic hockey zoom.

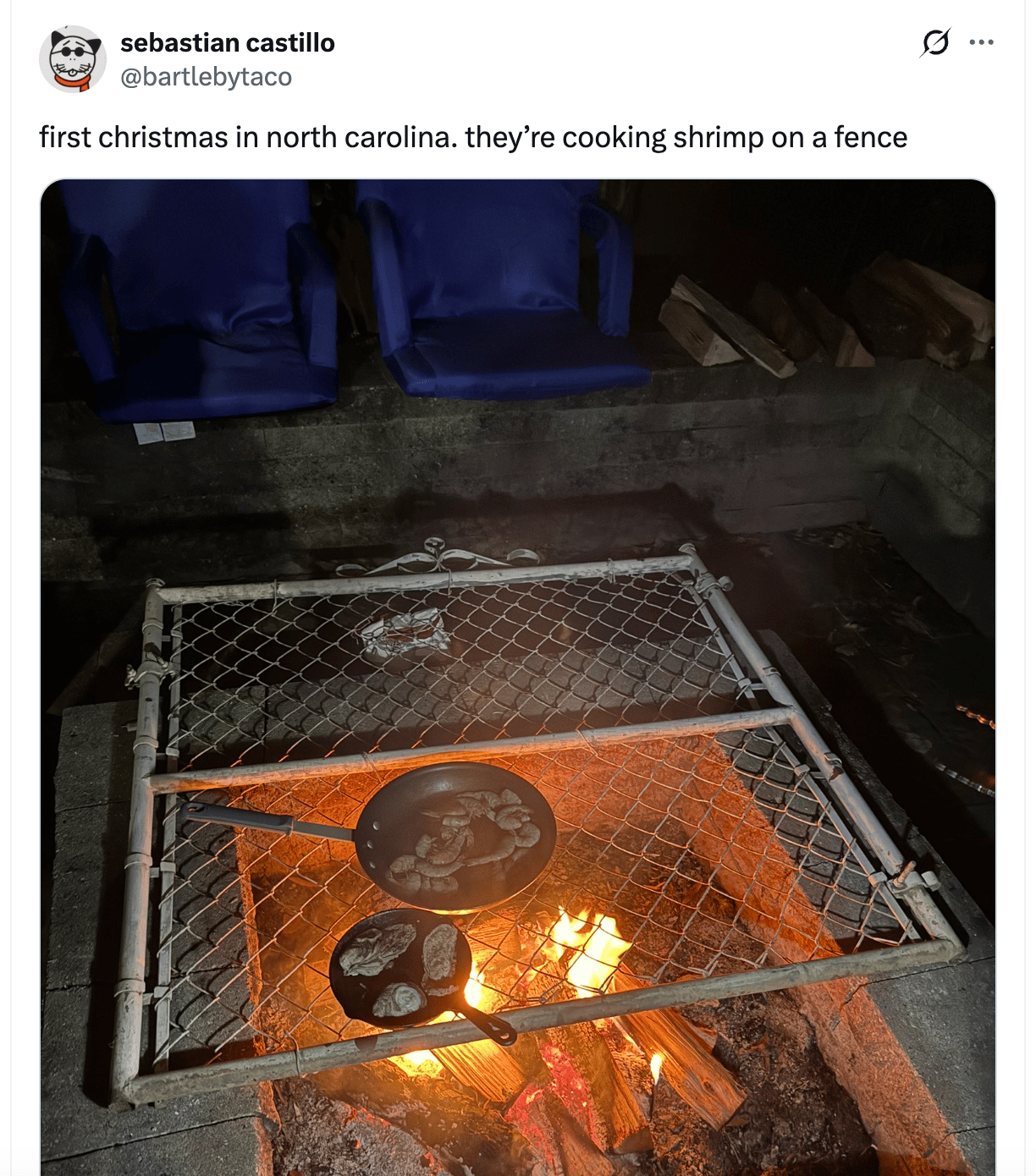

North Carolina Christmas

Some Stray Links

P.S. here’s a crab tureen.

***Any typos in this email are on purpose actually***