This week’s question comes to us from Anthea Tawia:

I came to San Francisco to visit the company I work for—sadly I was let go the first day I visited the office. I’m early in my career, worked there about 5ish months and really felt this was my big break. It’s disheartening to find yourself searching again after thinking you found what you’re looking for. How do you face a future that feels so uncertain?

First of all, I’m incredibly sorry this happened to you. It’s unforgivably brutal.

Secondly, I fear you’re about to be deeply disappointed in my answer because there is no easy answer to what you—and so many other people—are currently going through. I also refuse to engage in the type of toxic positivity that would tell you this is a good learning experience, or that it will all be alright. Because the former is a straight-up lie and the latter is questionable at best.

I’m sure other people have told you this, but it bears repeating not just for you but for anyone else reading this who might’ve recently lost their job: It’s not your fault, and there is nothing you could’ve done differently to change the outcome.

I’ll say it again: It’s not your fault, and there is nothing you could’ve done differently to change the outcome.

I’m assuming, just playing the odds here, that you work in tech. Probably in some design or design-adjacent capacity.

My own design and tech journey started under very different circumstances, at a very different time. I accidentally joined up almost at the very beginning, when things were more driven by curiosity than profit motives. And by “accidentally,” I mean that I had no clue this could be a career. None of us did. We had no idea what it was that we were making or how it would slot into the world, but either through naivete, hubris, prescience, or a combination of the three we felt this was something important, and overall positive. Words like “democratization,” “netizens,” and “new economy” were being thrown around with wild abandon. We were right about some things. It did change the world, but that was a massive monkey’s paw. We were wrong about other things. It was not overall positive. And we were ignorant about more things than we were right or wrong about combined. But by and large, there was the sense that the industry was attempting, if not always succeeding and certainly mired in the biases of white men, to make the world a better place.

It did feel, for at least a while, that we were doing a fair bit of solving problems, and making things that people enjoyed. Online banking and bill paying is nice. The iPod was cool. CueCat? Very cool. Social media could’ve worked, maybe with different people in charge. 3D printers are cool. (I’ve made about 500 anti-ICE whistles this week!) Video cams were cool when we were pointing them at coffee pots and not our neighbors. Borrowing books from the library on an e-reader? Amazing.

Eventually, the industry did well enough to make a lot of people accidentally rich, which attracted people who were now expecting to get rich, and, as with every new industry that eventually matures and goes into maintenance mode, a group of people who were pissed off they weren’t getting rich as quickly as the last group. And that’s when the problems that we were trying to solve switched from “what do people need” to “I’m not rich yet.” And eventually to “Ok, I’m richer than I ever need to be, but there is still some money, land, and water over there that I want.”

But when I think back to myself at the start of my career, and the things that pulled me towards this industry, I don’t see myself making that same decision if I were making it now. This is not a place of honor.

As Pavel Samsonov so clearly and succinctly said recently on Bluesky: “Once, technology solved problems. People liked having problems solved, so they liked technology. Tech execs started to think of that sentiment as their due. So when they stopped solving problems, and people stopped liking them, they became outraged. ‘How dare you not love whatever we give you?’”

We do not love the surveillance. We do not love the slop. We do like paying rent and going to the doctor though. So we keep trying to do the work.

I think we need to open our eyes to the fact that the industry many of us currently work in not only doesn’t care about its workers, it actively resents them. In their eyes, we have gone from being the people who made things possible to an unnecessary burden on the bottom line.

They hate that we charge money for our labor, and see that money as something we are stealing from their pockets. Pockets which are very large, and already fuller than they will ever be able to use in a lifetime.

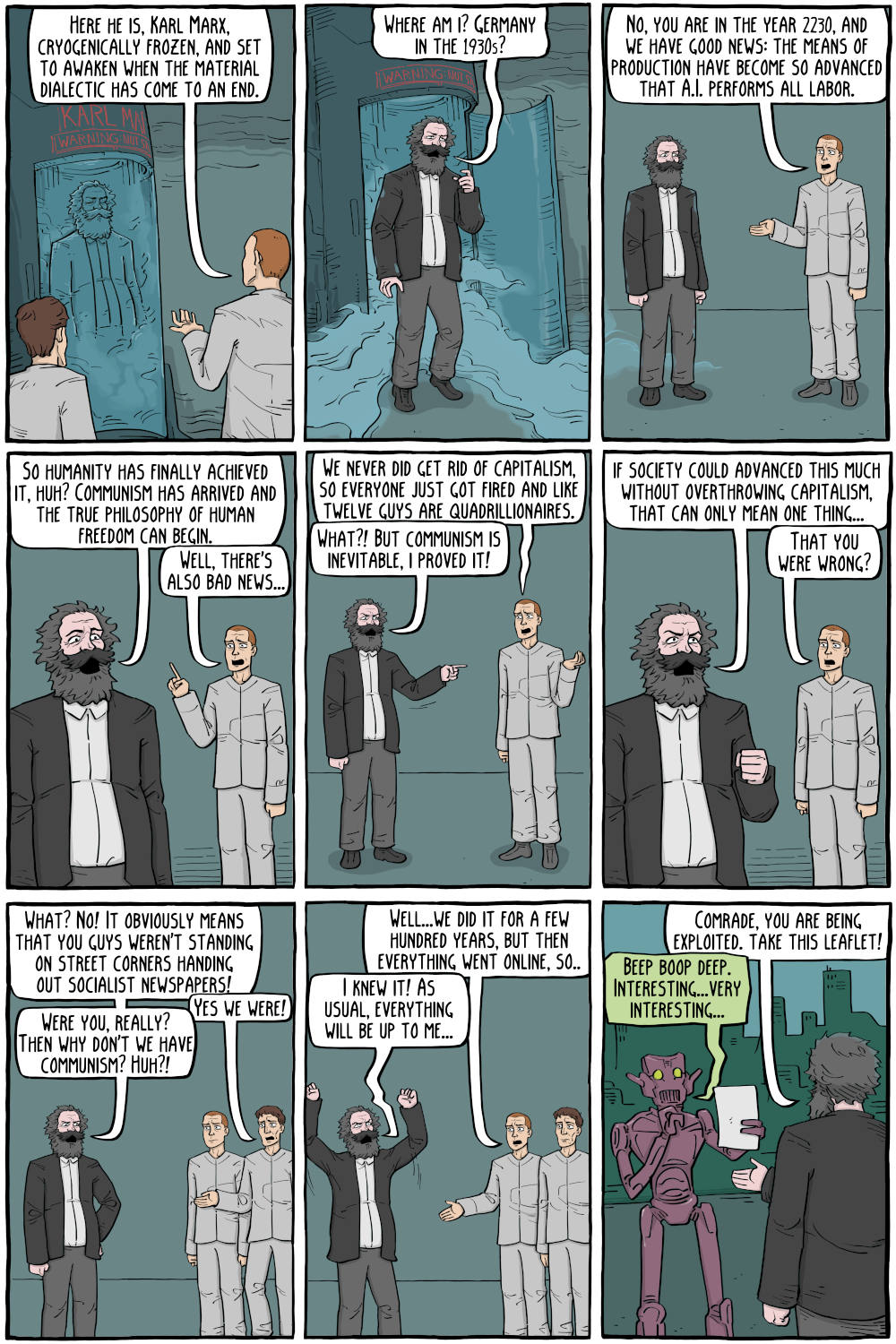

They don’t want you to improve, they don’t want you to be more productive, they don’t even want you to be more pliable. They just want you gone. As I was writing this sentence I got an alert from a friend that Block, Jack Dorsey’s latest chewtoy, has laid off 4,000 people. Which made its stock rise 24%. When 4,000 people lose their livelihood, their ability to pay their rent, their ability to go to the doctor, their ability to look out for their children, and the system that we live under cheers that on… That system needs to be destroyed. Capitalism sees workers as a bug, not a feature. They are dying to eliminate us. Capitalism is not a system of honor.

If you are looking for a sliver of positivity in those last few paragraphs, it might be that five months into your career you still have time to walk back out and try another door. I realize that this is neither easy to do, nor uplifting, nor the answer you were looking for. But I feel duty-bound to tell you this industry is not a place of honor.

CEOs are handing out lucite to fascists. Companies are building massive surveillance networks to make kidnapping our neighbors easier. The world’s richest idiot has turned Twitter into a child pornography factory. VC firms are hiring partners whose only job qualification is that they’ve murdered Black people on the subway. And an industry that senses its own decline is now stuffing every product and service we’ve adopted with slop like slop is the only lifesaver left on the Titanic.

To make it even more depressing, as if that were necessary, when I look out at the evil bastards doing these things, celebrating the murder of my neighbors, I see many of the same faces who were talking about tech as a force for good when I first started. I see many of the same faces who were posting letters about the importance of diversity to their corporate sites in 2020. I see many of the same faces who promised to “do better” after the brutal murder of George Floyd, now calling for a bigger ICE presence on our streets. These are not people of honor.

Anthea, I am so far away from answering your question. So let me try to answer it now, though I fear that you will not like my answer. I have too much respect for you to lie. Your last sentence was about facing a future that feels so uncertain. But I feel like a career in tech at this point is far from uncertain. In fact, some things are more certain than uncertain. You would most likely be working for someone who doesn’t value you. You’d most likely be building something that is not improving the world. You’d most likely be forced to either spend your time babysitting the slop machine (and secretly fixing its mistakes) or actively working on the slop machine (and secretly pretending it was not full of mistakes). You’d be surrounded by over-eager 9-9-6 techbros who see themselves as temporarily embarrassed millionaires, and who don’t so much want the boot off workers’ necks as they want their own foot inside the boot.

I know none of this feels good to hear. But I don’t think this industry deserves you. You deserve to work at a place that treats you with respect. A place that gives you the room to learn and grow, while cherishing the expertise you’re already walking through the door with. A place that pays you well and provides medical benefits for you and your family. A place with set hours, so you can flourish outside of work as well. A place where your opinion is not only welcome, but encouraged. A place where you feel safe. A place where you and the other workers can collectively bargain with management, because they understand that you are where the value comes from. I say these things not because you are special—although I’m sure you are!—but because all workers deserve these things.

Sadly, I fear those things are close to impossible to find in the tech industry at the moment. As you found out from direct experience. So I’d suggest asking yourself what you would be doing right now, if not for tech. Not because you don’t deserve to be here, but because it doesn’t deserve you.

If you decide to persevere in this industry, walk into every interview with your head held high and remember that you are interviewing them as much as they are interviewing you. You are deciding whether this is a company you want to sell your labor to. And I wouldn’t hesitate to walk out of any interview where you didn’t feel safe or valued. I’d walk the floor. I’d talk to other workers. (Outside work if possible.) I’d read up about the company. And I’d walk into that interview like I was doing a deposition. And of course, remember that none of this guarantees they won’t pull the same shit as your last company.

There is an uncertain future in front of you. And for that I am very sorry. Again, none of this was your fault, and there is nothing you could have done to keep it from happening. But in that uncertain future you may start finding things that you didn’t expect to find, maybe behind doors that you’d previously closed off. And maybe, hopefully, one of those will lead to a life full of joy and love. Keep in mind that a job will take up a huge percentage of your life, and this is not a practice life. It deserves to be amazing, not spent working for assholes. Life is wild, it doesn’t always go where you’re expecting it to. Shit sucks. Shit is unfair. Shit is brutal. Shit also makes strawberries. And whatever else we may do with our lives, we are all, at heart, strawberry farmers.

And for any tech leaders who are reading this and believe themselves the exception? Great. Show me. Instead of sending me the “not all tech leaders” email you’re already crafting in your head… do something to prove to us that it’s “not all tech leaders.” Take a stand. Call out your brethren. Refuse to work with Nazis. Stop licensing your software to Nazis. Start treating your workers with the respect they deserve. Every dollar in your very large pockets came from their labor. Share it.

And if you are running a company that respects its workers, and treats them honestly, and fairly? Hire Anthea. She seems awesome. You’d be lucky to have her.

📓 Some book news: If you’ve pre-ordered the new book, How to die (and other stories), thank you! I originally planned on getting all the pre-orders shipped out in February, but my printer has been sloooooooooow in getting books to me. Some orders have gone out. And there is a large shipment headed my way. I am sending books out in the order I received them. Fun fact: I asked a friend who works at a bookstore why things were slow and he told me every press in America is swamped right now, printing Heated Rivalry. Which honestly? I can’t get mad about that. I appreciate your patience. I love you.

🙋 Got a question? Ask it. I might meander myself into a useful answer.

💰 Join the $2 Lunch Club and help me pay my rent.

📣 The next Presenting w/Confidence workshop is scheduled for March 19 & 20. It’s a good workshop for folks interviewing.

🏳️⚧️ This week we are funneling all our help to the Trans Continental Pipeline. They’re busy getting trans people the fuck out of Kansas, and into Colorado. Because, yes, it’s come to this. Fuck these fascists.