Trump has found an aesthetic to define his second term: grotesque AI slop.

Over the weekend, the Trump administration posted at least seven different pieces of AI-generated or AI-altered media, ranging from Trump imagining himself as the Pope and a Star Wars Jedi (or Sith?) to Obama-esque “Hope” posters featuring people the administration has deported.

This is the Slop Presidency, and AI-generated images are its perfect artistic medium. They’re impulsively created, grotesque, and low-effort. Trumpworld’s fascination with slop is the logical next step for a President who, in his first term, regularly retweeted random memes created by his army of supporters on Discord or The Donald, a subreddit that ultimately became a Reddit-clone website after it was banned. AI lets his team create media that would never exist otherwise, a particularly useful tool for a President and an administration that have a hostile relationship with reality.

Trump’s fascination with AI slop began last summer, after he said in his debate with Kamala Harris that legal Haitian immigrants in Springfield, Ohio, were “eating the cats…they’re eating the pets.” The internet’s AI slop factories began spinning up images of Trump as a cat-and-dog savior. Since then, Trump and the administration have occasionally shared or reposted AI slop. In his first week in office, Trump shared an AI-generated image of a “GM” car promoting the $TRUMP coin. “What a beautiful car. Congrats to GM!” he posted.

At the end of February, Trump shared a video on his Truth Social account that imagined a world where Gaza was turned into a Trump Casino.

But this weekend, Trump began sharing AI slop on a level we’ve not seen before.

Trump’s AI-tinged weekend began on Friday night with a photo-realistic picture of himself as the Pope on his Truth Social account. The White House reposted a screenshot of the image on X, which pissed off the Catholic Church.

“This is deeply offensive to Catholics especially during this sacred time that we are still mourning the death of Pope Francis and praying for the guidance of the Holy Spirit for the election of our new Pope. He owes an apology,” Thomas Paprocki, an American bishop in Illinois, said on X.

During a press conference on Monday, Trump dismissed the accusation that the Pope image was offensive and then said he hadn’t posted it. “The Catholics loved it. I had nothing to do with it,” Trump said. “Somebody made up a picture of me dressed like the Pope and they put it out on the internet. That’s not me that did it, I have no idea where it came from. Maybe it was AI. But I know nothing about it. I just saw it last evening.”

All political movements are accompanied by artists who translate their politics into pictures, writing, and music. Adolf Ziegler captured the Nazi ideal in paintings. Stalin’s Soviet Union churned out mass-produced, striking propaganda posters that told citizens how to live. The MAGA movement’s aesthetic is AI slop, and Donald Trump is its king. It is not concerned with convincing anyone or using art to inform people about its movement. It seeks only to upset people who aren’t on board, and to excite the faithful precisely because it upsets those people.

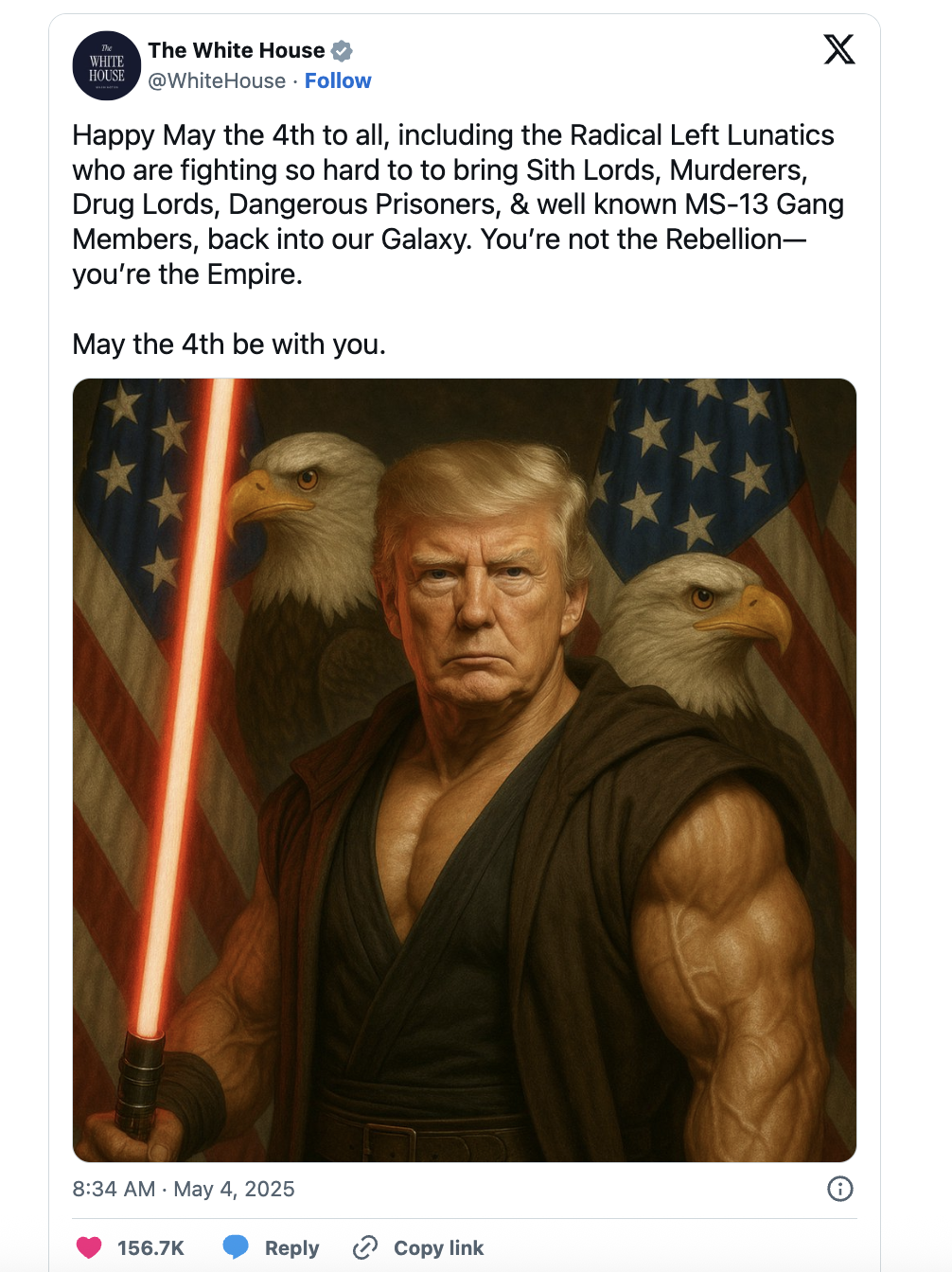

Not content to just aggravate Catholics, the Trump administration then used AI to offend adherents of another of America’s major religions: Star Wars fans. On May the 4th, the official White House X account posted an AI-generated image of a muscle-bound Trump wielding a red lightsaber and flanked by two bald eagles.

“Happy May the 4th to all, including the Radical Left Lunatics who are fighting so hard to to [sic] bring Sith Lords, Murderers, Drug Lords, Dangerous Prisoners, & well known MS-13 Gang Members, back into our Galaxy. You’re not the Rebellion—you’re the Empire,” the post said. “May the 4th be with you.” As the replies pointed out, red lightsabers are typically wielded by villains.

This was just one entry in a series of Star Wars-related AI-generated cringe that went out from official Trump admin accounts over the weekend. DOD Rapid Response on X (an account that publishes propaganda on behalf of Secretary of Defense Pete Hegseth) posted a five-minute video featuring a Star Wars-style opening crawl of Trump’s “accomplishments” before treating viewers to a pic of Trump and Hegseth as Jedi. The account for the U.S. Army’s Pacific Command sent out an “AI-enhanced” image of soldiers doing a training exercise. Both soldiers’ weapons were replaced with lightsabers.

Trump and the people who created AI image generators do not respect artists. There is no style that either will not exploit or sully. OpenAI reduced Hayao Miyazaki’s life’s work to a gross meme, and the White House played along. For years, the Lofi Girl has sat in windows on screens across the planet while people studied, read, and worked. Over the weekend, the White House YouTube channel ran “Lo-Fi MAGA Video to Relax/ Study To,” in which an animated President Trump sat at a desk, mimicking the Lofi Girl.

Maybe you don’t like Star Wars, are unmoved by Studio Ghibli films, or have never chilled to lofi beats. It doesn’t matter. The message is clear: if you love something, Trump will pervert it. Nothing will be left untouched. Sacred objects and beloved art exist only to be desecrated. AI has made that as easy as pushing a button.

AI-generated slop is part of a brute-force attack on the algorithms that control reality, and the Trump administration’s constant use of AI art reflects its own brute-force attack on American democracy. It’s not just that slop’s aesthetics are useful for Trump; its entire mode of being matches how his administration has governed so far: by brute-forcing the Presidency with a slew of executive orders, budget cuts, attacks on institutions, and sloppily executed deportations. The strategy is to overwhelm the American bureaucracy and the legal system, and to exhaust his enemies with an endless stream of bullshit; by the time we sort out what’s legal and what’s not, much of the damage has already been done.

One of the wonderful things about making art is the process. A lot happens between conception and execution. An idea pops into an artist’s head, and it changes dramatically as they attempt to render it into reality. That doesn’t happen with AI-generated images. There is no creative process; there is only instant gratification. Whatever impulsive and grotesque thought pops into the creator’s mind can be realized immediately.

And so every revenge fantasy Trump and his followers ever wanted can be made real at a moment’s notice. On March 27, the White House X account posted a Ghibli-style AI image of a crying woman being arrested by ICE.

Here is a real woman who has been accused of a crime, her image appropriated by the state and rendered into a cartoon. America has total power over this woman. She was arrested for drug trafficking, and her image has been plastered all over the internet. She’ll be deported. Not content with total control over her body and future, the administration has made her into a caricature and invited its followers to mock her online.